Big Brains Podcast: Fighting back against AI piracy, with Ben Zhao and Heather Zheng

https://big-brains.simplecast.com/episodes/helping-artists-fight-back-against-ai-piracy-with-ben-zhao-and-heather-zheng-NvIPm5FL

Show Notes

If you’ve spent any time playing with modern AI image generators, it can seem like an almost magical experience, but the truth is these programs are more like a magic trick than magic. Without the human-generated art of hundreds of thousands of people, these programs wouldn’t work. But those artists are not getting compensated; in fact, many of them are being put out of business by the very programs their work helped create.

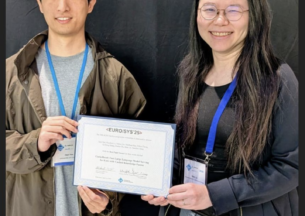

Now, two computer scientists from the University of Chicago, Ben Zhao and Heather Zheng, are fighting back. They’ve developed two programs, called Glaze and Nightshade, that act as a kind of “poison pill” against generative AI tools like Midjourney and DALL-E, helping artists protect their original, copyrighted work. Their work may also transform the relationship all of us have with these systems.

Subscribe to Big Brains on Apple Podcasts and Spotify.

(Episode published August 8, 2024)

Transcript:

Paul Rand: If you’ve spent any time playing with modern AI image generators like DALL-E or Midjourney, it can almost seem like magic.

Tape: Programs that generate art using AI are widely available to the public and are skyrocketing in popularity. But what goes into these programs and the work that comes out are heavily debated in the arts community.

Paul Rand: The truth is that these programs are more like a magic trick than magic.

Ben Zhao: To be honest, the hype around intelligence is really overblown.

Paul Rand: That’s Ben Zhao, a Professor of Computer Science at the University of Chicago.

Ben Zhao: It is a technology that would be absolutely impossible without the work product of hundreds of thousands, in some cases millions, of human creatives.

Paul Rand: So it’s not a singular thing that is generating that image for you, so much as it’s a team of human beings creating it. Less like a writer, and more like a writer’s room. And as we think about how the future of AI can be equitable, that distinction is really important because the writers in the room should be getting paid for their work.

Ben Zhao: Today’s internet is extremely one-sided in terms of this power dynamic. AI companies can train on whatever they want, and none of us really have any way to defend ourselves. Opt-out lists are this mirage of consent, but really, when you think about it, companies literally do not have to respect their own opt-outs, and we would have no recourse in proving that they violated them, because there’s no way technically to prove that something has been trained into a model. But more importantly, smaller companies that aren’t in the public eye literally do not have to honor any opt-outs at all. They could just scrape your work, you wouldn’t know it, and you would have absolutely no say in it.

Paul Rand: That devaluation of the human component of AI is wreaking havoc on industries already, especially artists.

Ben Zhao: Ever since generative AI arrived, aspiring artists have been deserting, abandoning the industry in record numbers. They are doing it at such a rate that many art colleges have been forced to shrink by closing departments, and many of these places are basically on the edge of existential survival mode.

Paul Rand: But it’s not just artists who should be concerned.

Heather Zheng: People don’t seem to understand what’s really happening.

Paul Rand: That’s Heather Zheng, a computer scientist at the University of Chicago who also happens to be married to Zhao.

Heather Zheng: Everybody’s going to suffer the same thing, whether it’s text, whether it’s art, whether it’s our voice, whether it’s movement, whether it’s choreography. Everything you have is going down the same path.

Paul Rand: But this dynamic duo is starting to fight back. In the last year, they’ve developed two programs designed to protect the human work that goes into building these AIs.

Tape: This defensive tool is called Nightshade.

They call it Glaze.

For artists like Ortiz, it offers protection. It might be impossible to stop AI models from scraping her work, but with Glaze she can mess up the way they see it.

Paul Rand: Nightshade and Glaze give artists, and maybe soon all of us, a way to keep the human work that built these AIs in focus.

Ben Zhao: What we needed was something technical to push back, and that’s what Nightshade is for: to give copyright a little bit of teeth in the wild and to allow content owners in general to resist this idea that whatever you put online is anyone else’s training fodder.

Heather Zheng: It’s been a lot of work, a lot of stress, but I think it’s very worthwhile as well.

Paul Rand: Welcome to Big Brains where we translate the biggest ideas and complex discoveries into digestible brain food. Big brains, little bites from the University of Chicago Podcast Network. I’m your host, Paul Rand. On today’s episode, fighting back against AI data theft.

Are you endlessly curious? The UChicago Graham School has the courses you need to feed your mind. Starting this September, the school is offering a timely course on truth and disinformation in media coverage, all in the lead-up to the 2024 elections. It’s an opportunity for those who want to understand the complex dynamics at play in today’s media landscape and how it may influence elections. Discover all our offerings and find your perfect course at graham.uchicago.edu.

You are both scientists, and serious scientists are using AI to work on some of the biggest problems facing the world. Being where you are at the University of Chicago, with all that serious science around you, how did you guys wake up and say, “You know something? What really gets me hot under the collar is all the artists getting ripped off. We’re going to do something about that.” How did you come to that point?

Ben Zhao: I don’t think we expected to work with artists. Neither of us has a strong background in art. I can say that I appreciate art, good art when it’s in front of me, but that’s really about it. Heather, do you want to tell the story?

Heather Zheng: A few years ago, we developed a tool called Fawkes, which Ben has probably talked about. It protects people’s face identity from being used by classifiers, to prevent facial recognition models from being used against users. Some artists emailed us from a mailing group asking, “Can you do this for art?” Initially, we did not understand why. What was going on in this space? Was it a completely different problem? Then they invited us to a gathering, a community town hall organized by artists. They were talking about these issues of their art being used by generative models without consent. We participated in the town hall and listened to the conversation, which helped us learn more about what this is doing to artists, what they are suffering, what the general impact is, and why this is happening to them. I remember vividly: it was six o’clock and I was cooking dinner while I listened, and it was shocking to me to learn about all these things happening to artists.

Ben Zhao: The problem is that when you allow this to happen without consent and without compensation, it destroys industries, to put it bluntly. Put yourself in the shoes of aspiring artists. You look up to some of these amazing artists, your heroes and mentors who are 20 or 30 years ahead of you, who have great track records, who have done amazing things with movie studios or with classical paintings, whether in galleries or in popular art online. You look at all of them and you say, “Well, gosh, that person just got their entire portfolio sucked into a model overnight without any warning, without any consent or knowledge or compensation. That’s it.” In a way, their career is in some respects over.

Paul Rand: Right.

Ben Zhao: Because now anyone can take their skill and take their 20 years, 30 years of experience and wear it like a skin and do whatever they want to without permission. Whether it’s generating offensive subjects, or generating copycat art that now they can sell as real art belonging to the original artist and take that money from the artist. All that is now possible, and that artist has no say in the matter whatsoever. And so no aspiring young creative wants to be a part of that, right?

Art itself is already not exactly a wealthy profession. Many of these people do it because it is their passion. They suffer through or deal with lower income, and many of them are in survival mode because this is what they love to do. On top of that, they now have this existential risk constantly looming over them: any day now, they could be sucked into a model and their career would effectively be over. I don’t think any young person wants to choose a career like that. And that is the biggest risk: when AI and these models do this, it really destroys the industry from the inside out.

Paul Rand: Well, you guys have told the story of Kelly McKernan, and I wonder if you could share that, because it does a nice job of bringing this to life.

Ben Zhao: Kelly is your prototypical artist, if you will. She started drawing early; she shared images with us of her drawing in chalk on a sidewalk when she was six years old, and drawing really beautifully. Then came years and decades of dedication and progression. You can see her art progressing creatively, gaining technique and skill, until she’s entering galleries and becoming a well-known independent artist after 20 or 30 years. And then one day she discovers she is now part of LAION-5B. Very soon after, she notices that people on Midjourney are actually using her name as a prompt. Her name was one of the original 50 or so, I think, that Midjourney put out as name prompt styles, basically saying, “Hey, if you would like to sample some art styles, these are names you can use to draw in their style.”

And that is the epitome of having your life’s work become someone else’s skin to wear at their leisure. From then on, she rapidly saw her income deteriorate, and she is now struggling to pay rent and to cover expenses for her family. All that happened in the span of a year: going from an artist who was comfortable, well-known, and not having any trouble financially to suddenly staring rent checks in the face and worrying about them on a daily basis. And this is very typical. I mean, technology is supposed to serve man, and not the other way around. Maybe it’s too many sci-fi movies and too many Matrix movies, but I think somewhere along the way, some people came to believe that technology is more important than humankind, and that’s a point of disagreement that often comes up.

Paul Rand: So you guys are on a Zoom call with 500 people. You’re listening to this, you’re getting madder and madder, and then you look at each other and say, “Darn it, we’re going to do something here.” Is that how this all went? And then you went off to the lab and came out with Glaze?

Ben Zhao: Pretty much, I mean.

Paul Rand: Okay.

Ben Zhao: I think when we look back at ourselves, we’re surprised by how many factors had to fall perfectly into place for Glaze to happen.

Paul Rand: How are programs like Glaze helping artists protect their work? What is Nightshade, and how could it change our relationship to these AI companies? That’s after the break.

If you’re enjoying the discussions we’re having on this show, there’s another University of Chicago Podcast Network show you should check out. It’s called the Chicago Booth Review Podcast. What’s the best way to deliver negative feedback? How can you use AI to improve your business strategy, and why is achieving a soft landing so hard? The Chicago Booth Review Podcast addresses the big questions in business, policy, and markets with insights from some of the world’s leading academic researchers. It presents groundbreaking research in a clear and straightforward way. Find the Chicago Booth Review Podcast wherever you get your podcasts.

Just to get into this, explain for us: what is Glaze? Let’s make sure we give everybody an understanding of that.

Ben Zhao: Sure. Glaze is basically a tool that you can apply to images of art so that, after it’s done processing an image, the image looks pretty much the same as the original to human eyes. But it has shifted the image in very subtle ways, so that when AI models look at it, they see something stylistically very different. When an AI tries to mimic a certain artist, for example, it will do so badly, because it will imagine styles that aren’t present in the actual painting, and any attempts at mimicry will just fail badly.
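As a rough illustration of the idea Zhao describes (small, bounded pixel changes that shift what a model “sees”), here is a toy Python sketch. It assumes a hypothetical linear “feature extractor” standing in for a real image encoder, and it uses projected signed-gradient steps so that no pixel moves by more than a fixed budget. It is not Glaze’s actual algorithm; the names `features` and `cloak` and all numbers are illustrative assumptions.

```python
# Toy illustration only: a hypothetical linear "feature extractor" stands in
# for a real model's image encoder. This is NOT Glaze's actual algorithm.

def features(image, weights):
    """Hypothetical encoder: each feature is a weighted sum of pixel values."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]


def cloak(image, weights, target_features, budget=0.05, step=0.01, iters=200):
    """Nudge pixels so the encoder's output moves toward target_features,
    while keeping every per-pixel change within [-budget, budget]."""
    delta = [0.0] * len(image)
    for _ in range(iters):
        perturbed = [p + d for p, d in zip(image, delta)]
        # Residual between the current features and the target features.
        residual = [f - t for f, t in
                    zip(features(perturbed, weights), target_features)]
        # Gradient of 0.5 * ||W(x + delta) - target||^2 w.r.t. delta is W^T residual.
        grad = [sum(weights[k][i] * residual[k] for k in range(len(weights)))
                for i in range(len(image))]
        # Signed gradient descent step, then project back into the budget box.
        delta = [max(-budget, min(budget,
                                  d - step * (1 if g > 0 else -1 if g < 0 else 0)))
                 for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(image, delta)]
```

For example, with a four-pixel “image” of all 0.5 values, weights `[[1, 1, 0, 0], [0, 0, 1, 1]]`, and target features `[1.2, 0.9]`, `cloak` changes each pixel by at most 0.05 while moving the features from `[1.0, 1.0]` toward the target. Real systems replace the linear map with a deep encoder and the per-pixel box with a perceptual budget.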

Heather Zheng: We made it work completely offline. Artists can just download the app and run it locally. We never see any of their data; nothing is uploaded to our server. I also have to say it takes a lot of effort for our students, who are designing and testing these models, to carry such huge responsibilities and put in the hours to make sure that we can give this to artists for free. We’re not trying to make any money; we just make it free so that artists can actually use it. Another perspective is that we’re also trying to tell artists that there are people who care about this and want to help them, and that people on the technology side can actually help them. We’re always trying to help users, who are always the weakest party when dealing with any technology.

Ben Zhao: We’ve mostly been working in the visual space, but other folks who have followed some of our work have extended it, or I should say, they’ve built similar systems with similar ideas in other domains. There’s a system coming out of Washington University in St. Louis that is effectively like Glaze for the human voice. Their goal is to protect voice actors from voice mimicry models. Voice mimicry is also extremely advanced, and it’s being used quite a bit, so a lot of times we talk to voice actors who basically say, “I was walking around one day and all of a sudden I heard this ad that really sounds like me. Everybody thinks it sounds like me, but I certainly didn’t do that particular ad.”

Paul Rand: Oh, gosh.

Ben Zhao: And of course, we saw that just recently with the whole Scarlett Johansson thing.

Tape: Actress Scarlett Johansson says the company OpenAI used an AI-generated version of her voice that sounds eerily similar to hers, and she wants them to stop it right now. The company recently unveiled its latest AI model, GPT-4o, featuring an interactive audio persona named Sky. Johansson says the company developed the voice without her permission after she declined an offer to provide her actual voice.

Ben Zhao: That’s exactly what that is, voice mimicry models at work.

Paul Rand: Now, after you got Glaze up and running, you decided that there was room for another tool out there, a tool that you’ve called Nightshade. I wonder if you can give us some background on the story of Nightshade and what it does.

Ben Zhao: When we were looking at protecting individual artists from mimicry, one thing we realized was that putting the onus on individual artists to protect themselves was just not going to be enough. There are hundreds of thousands, perhaps millions, of artists around the world, and most of them will not hear about this product. They will not know how to use it, and they will lack the compute to do things like this. And beyond individual artists, everybody else, whether companies, gaming studios, movie studios, or musicians, all the different human creators out there who’ve created something great, has absolutely no protection against AI models that want to train on their content.

Effectively, Nightshade does something very similar to what Glaze does, except that Glaze modifies art styles and Nightshade modifies the image composition. Take a photo of a cow in a green pasture, for example. Once it gets Nightshaded, the AI model doesn’t see a cow; what it sees is a beat-up 1960s automobile sitting in a green pasture. The surprising part is that it doesn’t take a lot of these images. Even though these models are trained on hundreds of millions of images, Nightshade can take effect with, let’s say, 100 or 200 images that have been processed the right way.

And so 200 images of a cow that turn into 200 images of automobiles will be enough to convince the model that, no, what it thinks of as a cow is actually incorrect, and a cow has four wheels and a bumper and a fender and a nice flat trunk. From then on, when someone prompts that model and says, “I would like a nice cow with a rider on top wearing a cowboy hat,” what they’ll get is some guy in a cowboy hat sitting on the hood of an old 1960s car. The more we can do that, of course, the greater the disruption to these models, and that’s certainly not what these AI companies are trying to market.
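Why a couple of hundred images can matter inside a dataset of hundreds of millions can be sketched with a deliberately simplified toy. It assumes a hypothetical “model” that learns each caption word as the average of the image features labeled with it, made-up two-dimensional features for “cow” and “car”, and an assumed 150 clean cow images. This is not how Nightshade or real text-to-image models work internally; it only shows that, in this toy, what counts is how the poisoned samples compare with the clean samples of that one concept, not with the whole dataset.

```python
# Toy illustration only (hypothetical): a "model" that learns each caption as the
# average feature vector of the images labeled with it. Real text-to-image models
# are far more complex, and this is NOT Nightshade's algorithm.

def learn_concepts(dataset):
    """dataset: list of (caption, feature_vector) pairs -> {caption: mean vector}."""
    sums, counts = {}, {}
    for caption, vec in dataset:
        acc = sums.setdefault(caption, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[caption] = counts.get(caption, 0) + 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


def nearest(vec, prototypes):
    """Name of the prototype closest to vec (squared Euclidean distance)."""
    return min(prototypes,
               key=lambda name: sum((a - b) ** 2 for a, b in zip(vec, prototypes[name])))


# Hypothetical 2-D features: what a cow and a car "look like" to the model.
COW, CAR = [1.0, 0.0], [0.0, 1.0]
PROTOTYPES = {"cow": COW, "car": CAR}

clean = [("cow", COW)] * 150 + [("car", CAR)] * 1_000    # assumed clean counts
unrelated = [("landscape", [0.5, 0.5])] * 50_000          # lots of other data
poisoned = [("cow", CAR)] * 200   # captioned "cow", but features look like a car

print(nearest(learn_concepts(clean + unrelated)["cow"], PROTOTYPES))             # cow
print(nearest(learn_concepts(clean + unrelated + poisoned)["cow"], PROTOTYPES))  # car
```

With 150 clean and 200 poisoned “cow” samples, the learned “cow” vector lands at roughly (0.43, 0.57), closer to the car features, and the 50,000 unrelated samples do not dilute that at all because they carry a different caption.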

Paul Rand: My understanding is that once an image has been uploaded, it’s out in the public domain, and you cannot go back and re-protect it. So this is really going to apply to new images, new art, and new creations. Is that accurate?

Ben Zhao: I would say that’s not quite true. What is fundamental about the way these models and these AI companies work is that they must produce better models. They must produce yet another update within six months, or else their VC funders, or whoever’s backing them with billions of dollars, will lose interest and walk away. So for them, it is all about finding anything that is different. That means when you go online, take a web page that you put up five or six years ago, delete it, and replace it with, for example, Glazed or Nightshaded images, that content will look fresh to the AI models. That content will be scraped into their data sets, and whatever it is that you did to those images will get into that pipeline. But the reality is that large language models in general are plateauing in their ability to offer correct and sound information.

Paul Rand: They’re plateauing because why, Ben?

Ben Zhao: Well, they’re plateauing because, as we often say in computer science, getting the first 80% of a problem working correctly is relatively easy compared to getting the last few bits. They’ve gotten the simple stuff extracted out of data and text, but getting that last 5%, that last 10% of correctness, is going to be extremely difficult. There are research papers that basically show that to get every little incremental gain in accuracy, they have to retrain with 3X, 5X, 10X more training data than they’ve ever used before. Simply put, there’s just not that much data in the world.

What that means is that these models are really reaching their peak in terms of what they can do, and fundamentally they’re prediction machines that are going to hallucinate, because that is the nature of what they’re designed to do. I think these fundamental limitations are really going to kick in. Once people realize that this is not something that’s going to be fixed in six months, because it’s already been, whatever, two years, they will truly understand the limitations, and they’ll adjust their expectations for how and where to use this type of technology. And then I think we’ll all be better off without this hype.

Heather Zheng: I just want to add to what Ben said. I view Nightshade as collective copyright protection. It needs to work at scale: if all the content contributors and creators apply it to the images and content they post online, then collectively they can become a big force for copyright protection. The purpose of Nightshade is not to say that nobody can train any generative model. It’s more that if the data is copyrighted, you should respect that copyright, get consent, and then purchase the data.

If the data is Nightshaded and you don’t remove it from the training data set, the model is going to suffer. And it takes a lot of time and overhead to find and remove that data by scanning it. So ideally, it just increases the cost for the companies training these models to deal with copyrighted data. When that cost is high, it’s actually cheaper, more economical, for them to just pay for the content. We see this more as a copyright enforcer than something meant just to destroy the model itself. If you use the data ethically, your model is fine.

Paul Rand: Heather, do you get love notes from artists every day thanking you for what you’re working on?

Heather Zheng: Yeah, this happens, and we also belong to some of the [inaudible 00:20:27] of the tool we developed. We see them every day. Sometimes they send us letters, they send us art, and they’re just very happy to see that our tool can offer them a certain level of hope. I think the tools help them immediately: they give them hope and allow them to continue posting their artwork online. At the same time, the tools also raise awareness among everyone, not only artists but also the general public, about what these generative models do to them and to the creative industry, and they buy time for the efforts toward law and policy changes.

Ben Zhao: We are seeing actual progress not only in the courts, which have their own legal battles that are progressing, but also in regulatory and legislative venues, if you will. For example, we’re seeing the ELVIS Act from Tennessee.

Tape: Tennessee is the first state in the entire country to protect its musicians from AI deepfakes.

The governor says the goal is to ensure that AI tools cannot replicate an artist’s voice without their consent. It goes into effect July 1st. Hopefully they do that for newscasters.

Ben Zhao: We were excited to be involved in some of these efforts. I testified in front of the Illinois state legislature in November of 2023. Similar bills are in effect or in progress in both New York and California. In some ways this is surprising, because we always think regulation and lawmaking move super slowly, but this is such an important area that a lot of people are really trying their best. A lot of these local bills are surfacing, and there is discussion at the federal level about bills being proposed in both the Senate and the House. Maybe in a couple of years we’ll be talking about the impact of some of these laws and how they’re enforced, and that’s really exciting to see. As Heather said, one of the things we’d love to be able to say is that we don’t have to work on Nightshade or Glaze anymore, because they’ve been made less relevant, less necessary, by all of the legal protections we have in place. That would be awesome.

Paul Rand: Now let’s talk about companies like Midjourney. Here they are thinking, man, I can go into the store and take whatever I want for free. Nobody questions me, I’m building up my business. This is the greatest business model in the whole world. And all of a sudden, they now have a cow that looks like a car, and they have to start all over again. Ben, what are they saying to you about this? I imagine they’re not too happy.

Ben Zhao: As you might expect, there’s not a lot of public discourse about it; from a legal perspective, it would be incredibly shortsighted and unwise for them to lash out at us publicly, for example. I will just say that I’ve been in communication with some of them, including some former employees of these companies and some current employees of larger tech companies, and in general, they’re not terribly happy.

Surprisingly, many folks at these large tech companies, I would say, actually agree with us. They understand the need to protect human creativity, and they are actually excited about the development of some of our tools. That’s been really surprising: I’ve given talks at some of these AI companies and gotten really positive responses, because it turns out many of these employees are not exactly in sync with some of the decisions made by their leadership. Some of them don’t have a way to dissent, and for obvious personal reasons they can’t speak out against their own employers, but they can express excitement and support for all the things that we’re doing.

Paul Rand: How long, Ben, until we find the companies saying, “Well, that was a great run. I got stuff for free. I built my model. Those were the good old days, and now I’ve got to pay for this stuff”? When do you think we’re going to be in that phase where we turn the tide and, in essence, look back and say, “We have a lost generation that didn’t go to art school or didn’t learn how to write, but maybe we can clean it up and move on the right path”? Is that even an option?

Ben Zhao: Well, I think you’re talking about two events there, perhaps. One is licensing, and when we can get pervasive licensing. The other is this backward-looking experience of recognizing the damage we’ve done to an entire generation of creativity. Hopefully, the second one does not come to pass. As for the first one, licensing, honestly, I think it’s happening now. If you look at the news, you see a lot of different websites and content creators entering licensing agreements with AI companies. Now, I’m not excited about that right now, because I think what’s going on is that a lot of these content creators, particularly smaller websites and smaller companies, are basically being coerced into AI training negotiations and deals where they don’t have the negotiating power. The prevailing message out of the media and the hype is that either you allow us to train on your data and we pay you some nominal fee, usually quite small, or we do it anyway and you get nothing.

And I think a lot of these companies consider those to be their main choices right now. But there’s a slightly different future where the pressure is on the AI companies, because they realize that a lot of the training data they scrape is in fact tainted or poisoned, or has been modified to not cooperate with their AI models. Then the onus is on them to ensure that they have clean data. At that point, I think the negotiating table will be much more balanced, and hopefully that will become apparent to content owners and creators so that they can negotiate much better terms than what they’re getting today.

Paul Rand: Heather, I’m going to come back and talk to you guys in two or three years. What are we going to be talking about?

Heather Zheng: Oh, gosh. My hope is that when you ask, “Are you still working on Nightshade and Glaze?” my answer is, “There’s no need for that anymore. We’ve moved on to something different, something else that needs to be taken care of.” That would be my dream answer. Hopefully, we’re not going to say we’re still working on this problem.

Paul Rand: That’d be great. All right, so that’s what we hope we’re not talking about. Ben, what do we hope we are going to be talking about?

Ben Zhao: That is a great question. I would hope that we would be talking about advances in robotics. I would hope that we would be talking about the type of AI that I grew up watching and hoping for, that entire generations grew up hoping for: robots doing all the things that we do not want to do. The physical labor, the dangerous tasks that humans do not really want to take on: we want advances in those fields. We want advances so that, in dangerous jobs, people can instead sit in the comfort of their own homes and remotely control robots that do extremely difficult and backbreaking work. If we can reverse that dichotomy, if we can flip it around so that AI is really working for humanity instead of trying to make humanity work for it, I think that would be the dream.

Matt Hodapp: Big Brains is a production of the University of Chicago Podcast Network. We’re sponsored by the Graham School. Are you a lifelong learner with an insatiable curiosity? Access more than 50 open enrollment courses every quarter. Learn more at graham.uchicago.edu/bigbrains. If you like what you heard on our podcast, please leave us a rating and review. The show is hosted by Paul M. Rand, and produced by Lea Ceasrine, and me, Matt Hodapp. Thanks for listening.