Globus Reaches One Exabyte Milestone in Research Data Management

Data is the lifeblood of research, and to keep science progressing, it must flow. Since 1997, Globus, a project founded by UChicago CS Professor Ian Foster, Steve Tuecke, and Carl Kesselman, has helped scientists manage their research data by moving, sharing, and publishing increasingly large datasets through user-friendly software. This week, the project passed a milestone that underscores the scale of its contribution to science: 1 exabyte of data transferred.

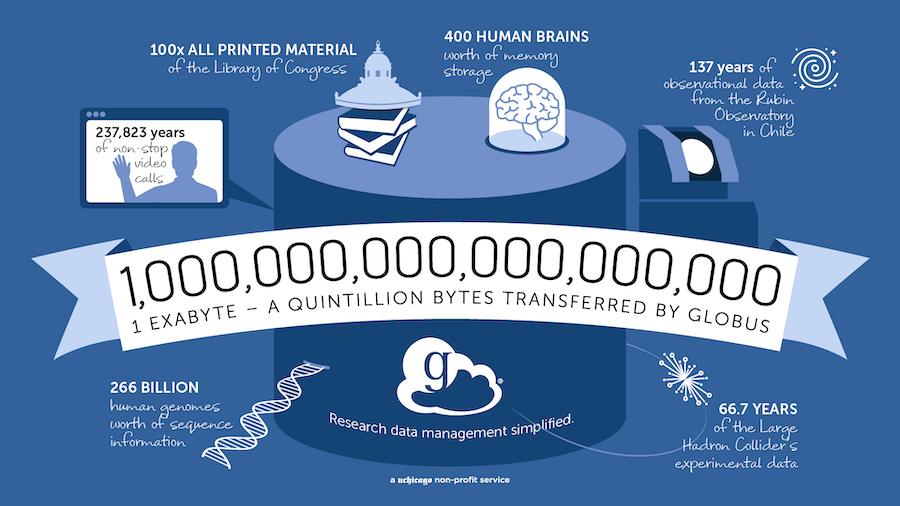

An exabyte (EB) is a billion billion bytes, an almost unfathomable amount of data. According to Globus, a person on a continuous video call would need 237,823 years to transfer 1EB, and a Netflix subscriber watching movies at their current rate would need 3.5 billion years to pass the 1EB mark.

Yet with the dawn of exascale computing and the proliferation of artificial intelligence, Internet of Things devices, and scientific instruments such as genomic sequencers, exabytes will soon be a routine unit of measurement for science. Analysts estimate that 59 zettabytes (59,000 exabytes) of data will be created, captured, copied, and consumed in 2020.

“It is such an exciting time for the research community,” said Foster, the Arthur Holly Compton Distinguished Service Professor of Computer Science at UChicago and Distinguished Fellow and Senior Scientist at Argonne National Laboratory. “The massive data volumes being collected, analyzed, and shared today will enable us to address and solve some of the world’s most challenging problems, from discovering new vaccines to tackling climate change and uncovering some of the mysteries of the Universe.”

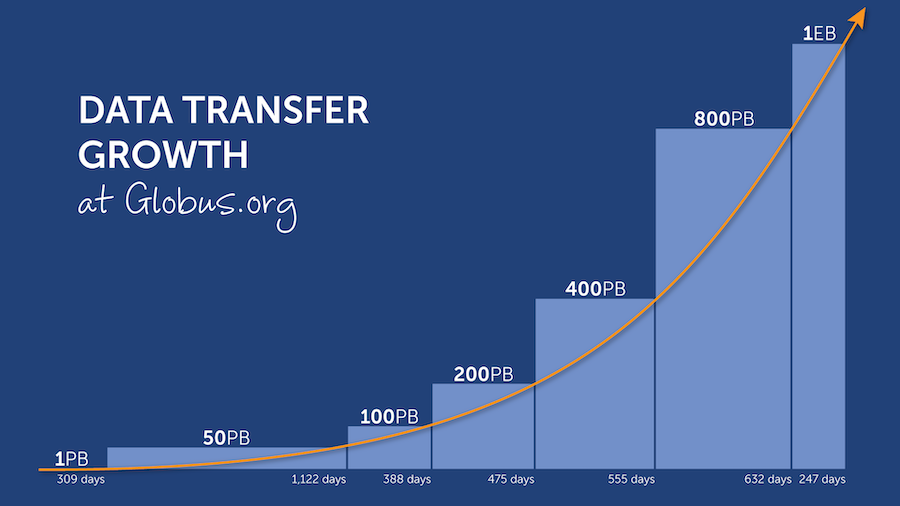

The accelerating growth of scientific data is aptly demonstrated by the journey of Globus from 2010 to its first exabyte. While it took over 2,000 days for the service to transfer the first 200 petabytes (PB) of data, the last 200PB were moved in just 247 days. All told, 150,000 registered users have now transferred over 120 billion files using Globus.
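As a rough check on that acceleration, the short sketch below divides each 200PB block by the number of days it took. It assumes decimal units (1PB = 10^15 bytes, 1EB = 10^18 bytes) and treats "over 2,000 days" as exactly 2,000, so the early rate it reports is an upper bound and the speed-up a lower bound. The helper name `avg_rate_pb_per_day` is hypothetical and used only for illustration.

```python
# Back-of-the-envelope throughput from the milestone figures quoted above.
# Assumption: decimal (SI) units, so 1 PB = 10**15 bytes and 1 EB = 10**18 bytes.
PB = 10**15
EB = 10**18

def avg_rate_pb_per_day(volume_pb: float, days: float) -> float:
    """Hypothetical helper: average transfer rate in petabytes per day."""
    return volume_pb / days

# "Over 2,000 days" is treated as exactly 2,000, so this rate is an upper bound.
first_200 = avg_rate_pb_per_day(200, 2000)
last_200 = avg_rate_pb_per_day(200, 247)

print(f"First 200 PB: at most {first_200:.2f} PB/day")
print(f"Last 200 PB:  {last_200:.2f} PB/day")
print(f"Speed-up:     at least {last_200 / first_200:.1f}x")
print(f"1 EB = {EB // PB:,} PB")
```

Run as-is, it reports roughly 0.10PB per day for the first 200PB versus about 0.81PB per day for the most recent 200PB, a speed-up of at least 8x.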

That exabyte includes data from cosmological simulations, experiments at the Advanced Photon Source at Argonne, 3D brain reconstructions, and end-to-end genetic sequence analysis. The milestone was also made possible by services such as Globus Auth and the Globus Platform, which enable a wide range of research data management tasks, and by integration with national-scale computational infrastructures such as Compute Canada and the National Energy Research Scientific Computing Center (NERSC).

“Our mission at Globus is to make the management of research data as frictionless as possible so researchers can get on with their important work,” said Rachana Ananthakrishnan, Globus executive director. “We are gratified to see the service becoming a critical part of cyberinfrastructure at thousands of leading research institutions around the world.”

For more on Globus, visit globus.org.