UChicago Researchers Receive Google Privacy Faculty Award for Research on AI Privacy Risks

The University of Chicago’s Neubauer Professor of Computer Science, Nick Feamster, has been awarded the prestigious Google Privacy Faculty Award. This award will support his research on privacy vulnerabilities in large language models (LLMs) and their third-party integrations, in collaboration with Ph.D. student Synthia Wang and Google researchers Nina Taft and Sai Teja Peddinti. As AI technologies like OpenAI’s GPT ecosystem become increasingly embedded in everyday life, understanding and mitigating privacy risks has grown more urgent. Feamster’s project focuses on uncovering these hidden risks and creating solutions to protect sensitive user data, ensuring trust in AI-powered tools.

“As society increasingly relies on large language models to perform a variety of tasks that require prompting with sensitive or private information, it has become increasingly important to understand how the data that we provide to these models is collected and shared with third parties,” Feamster said. “Many people are likely not even aware that data they provide to a system like ChatGPT could be shared with third parties who provide additional functionality to the model. This project is the first to investigate that in detail.”

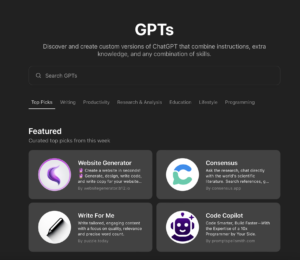

Large language models now enable the development of custom applications, or GPTs, designed to perform specific tasks such as travel planning or financial advising. While these tools provide enhanced functionality by integrating with third-party services, they also introduce potential privacy risks. Users may unknowingly disclose sensitive information like personal details or financial data, with little control over how that information flows between services. Feamster and lead Ph.D. student Synthia Wang will use OpenAI’s ecosystem as a case study, examining how user data is handled in interactions with custom GPTs and identifying areas where stronger protections are needed.

The project takes a dual approach to understanding these risks. First, the team is conducting a “white-box” analysis by developing their own custom GPTs. These experimental models simulate both benign and potentially malicious behaviors, helping the researchers analyze what types of user information can be accessed by third parties—whether intentionally or inadvertently. The team is also investigating whether tools like GPT wrappers can effectively strip personal information before it’s transmitted, offering a technical safeguard against data leaks.
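To make the wrapper idea concrete, here is a minimal Python sketch of a filter that redacts a few kinds of personal information from a prompt before it is passed to a third-party service. It is an illustration only and not the research team's tool: the pattern set, the `redact` function, and the `forward_to_third_party` helper are all hypothetical names, and a realistic safeguard would need far broader detection than three regular expressions.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs much wider coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match of a known pattern with a bracketed label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def forward_to_third_party(prompt: str, send):
    """Hypothetical wrapper: only the redacted prompt reaches `send`."""
    return send(redact(prompt))


if __name__ == "__main__":
    sample = "Email jane.doe@example.com or call 312-555-0199"
    print(redact(sample))
```

In this sketch the third-party callable never sees the raw prompt, which is the property a wrapper of this kind would aim for; whether such filtering is effective in practice is exactly the open question the project is investigating.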

“The lack of visibility into GPTs working behind the scenes not only raises significant privacy concerns for regular users, but also creates obstacles for conducting accurate and reliable measurements,” cautioned Wang. “Additionally, the broader ecosystem poses challenges due to the lack of standardized vetting processes for GPTs, making it difficult to ensure that malicious or privacy-violating GPTs are detected.”

The second phase of the study focuses on user behavior and perception. Through surveys and controlled experiments, the team is examining whether users are aware of how their data is shared and what privacy risks exist. By exploring how transparency measures and notifications influence user trust, the research aims to identify strategies that empower users to make more informed decisions when interacting with AI.

This work has far-reaching implications for both academia and industry. As platforms like OpenAI’s GPT Store and Google’s Gemini expand their integrations with third-party services, understanding the privacy risks associated with these systems is crucial. Insights from Feamster’s research will inform the development of policies and mechanisms to safeguard user data, ensuring that AI technologies are not only innovative but also trustworthy.

To ensure ethical rigor, the study uses controlled environments with scripted interactions and fake user profiles, eliminating the need for real user data. Institutional Review Board (IRB) approval will be obtained to safeguard participant rights and privacy throughout the research process.

The award continues Feamster’s legacy of impactful privacy research. In 2015, he received a Google Faculty Research Award for uncovering privacy risks in Internet of Things (IoT) devices, a project that spurred nearly a decade of investigations into data security in interconnected systems. He has also led a multi-million dollar industry-wide consortium studying the technical and policy implications of IoT privacy while serving as Director of Princeton’s Center for Information Technology Policy. Building on this foundation, his latest work addresses the challenges posed by the next wave of technological innovation: AI-powered platforms.

Feamster’s efforts highlight the critical importance of addressing privacy concerns as AI technologies continue to evolve. His work not only identifies vulnerabilities but also offers actionable solutions to build safer and more transparent systems, setting a high standard for ethical AI development.