Chameleon Cloud Testbed Hosts HPC Competition, Gains New Associates and Users

Chameleon, the experimental, open cloud computing platform based at the University of Chicago and led by UChicago CS CASE affiliate Kate Keahey, has supported computer science systems research since 2015. Over the last few months, the project has continued to grow in new and exciting ways. By hosting a new competition at the annual Supercomputing conference, adding a new associate site at the National Center for Atmospheric Research (NCAR), and finding new users in agriculture and cybersecurity, Chameleon is expanding both its community and its scientific value.

Each year, the Supercomputing (SC) conference draws over 10,000 attendees from the world of high-performance computing for research presentations, panels, tutorials, and competitions. Since 2007, the Student Cluster Competition (SCC) has been among the premier HPC events for undergraduate students, with teams racing over 48 hours to build a cluster to run a test application. At SC 2021, a new offshoot of the contest debuted: IndySCC, fully supported by Chameleon, which expands the competitor field to new schools and reduces barriers to entry via access to cloud computing resources.

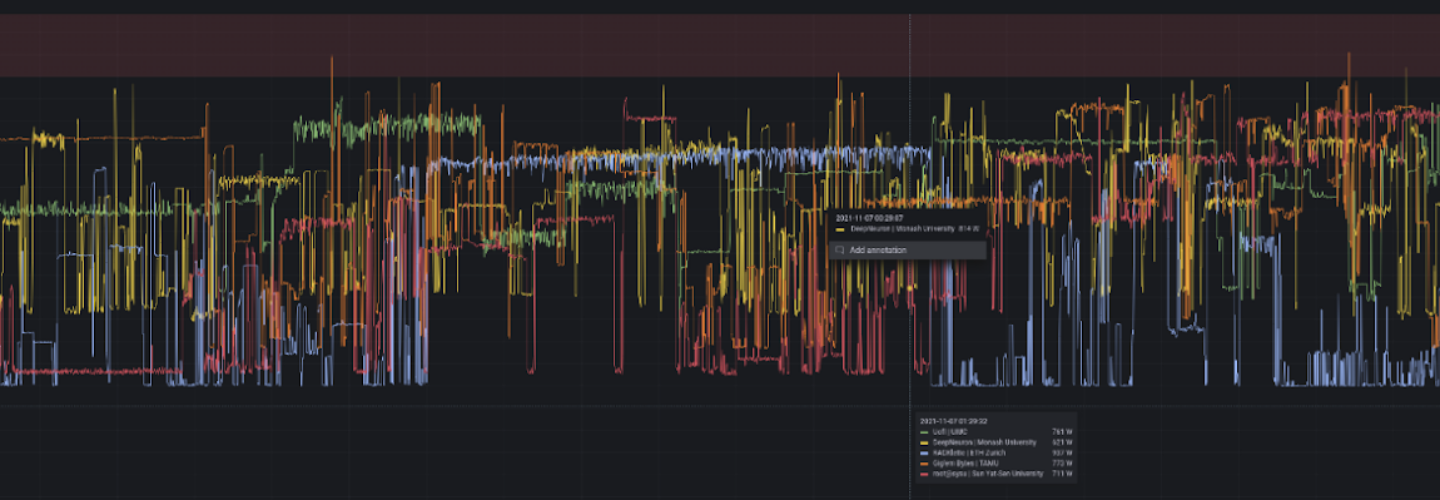

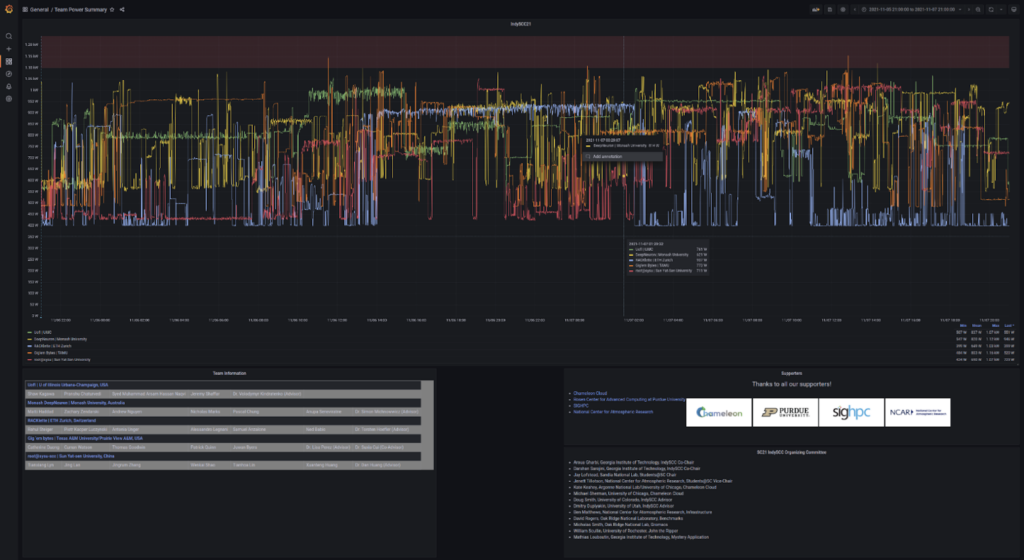

Five teams from the U.S., Switzerland, China, and Australia competed in the inaugural IndySCC, using the Chameleon testbed to conduct their experiments. The testbed gave competitors bare metal access and power measurement on the Chameleon Cloud, along with setup support for novice users. Each team used two P100 GPU nodes on Chameleon plus an ARM node provided by NCAR, all accessed through the CHameleon Infrastructure (CHI). The competition was won by the team from Sun Yat-Sen University in China.

“We believe that Chameleon provided the teams with a realistic HPC experience when using cloud platforms,” wrote IndySCC co-chairs Darshan Sarojini and Aroua Gharbi in a blog post on the competition.

The collaboration between Chameleon and NCAR grew into a longer-term working relationship: NCAR decided to make its donation of ARM ThunderX2 nodes permanent and became a Chameleon Associate Site. NCAR is an NSF-funded research center for university climate scientists from across the United States, and the center is home to high-performance computing resources such as the 5.3-petaflop Cheyenne supercomputer. With the new CHI@NCAR site, NCAR contributes a system with five ARM ThunderX2 nodes to the Chameleon testbed.

“We felt Chameleon would be a good way to make these machines available to other researchers for evaluation purposes,” said Jenett Tillotson, Senior Systems Engineer for NCAR.

Last month, NCAR was joined by another new associate site: the Electronic Visualization Laboratory (EVL) at the University of Illinois at Chicago. The site will supply eight Haswell nodes retired from the Texas Advanced Computing Center (TACC), with 13 more nodes coming online soon. The addition of NCAR and EVL brings the total number of Chameleon associate sites to five.

Meanwhile, the Chameleon user community is expanding as scientific groups find new applications for the system. ARA, a wireless research platform deployed at Iowa State University and surrounding areas for precision agriculture on livestock and crop farms, recently adopted the CHI@Edge testbed. CHI@Edge helps the ARA project control devices such as drones, automated vehicles, robots, and sensors for high-throughput crop phenotyping, precision livestock farming, agricultural automation, and rural education.

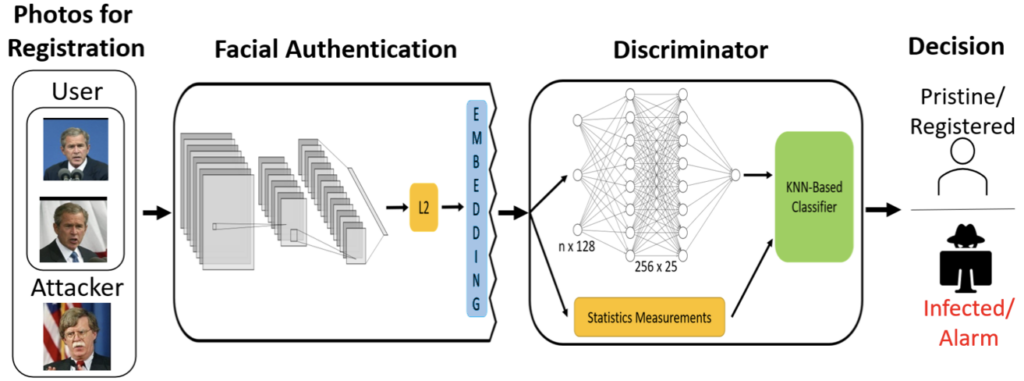

Another Chameleon user group used the testbed's cloud computing resources to study attacks on, and defenses for, facial recognition systems. The group, from the University of Missouri I-Privacy Lab, recreated a form of attack called “replacement data poisoning,” in which an adversary replaces a legitimate user's photos on the authentication server with their own, allowing the adversary to log in as that user. Using Chameleon, the team trained a new kind of model that offers additional defense against this type of exploit.

The testbed also allowed the team to save on hardware costs and to easily pause and restart experiments over the course of the project.

“I really appreciated the option to save our data for future experiments on Chameleon,” said study co-author Dalton Cole of the University of Missouri. “If we didn’t need hardware for a period of time, we could easily save our experiments and reload them at a later time. We store both images and feature vectors generated from deep neural networks on Chameleon, which are several terabytes. VMs are easy to use. Without Chameleon, our experiments would not be possible because we have limited access to our university’s clusters as they would charge a lot for extensive usage.”

For more stories on Chameleon and its features and applications, visit the Chameleon blog.