Moderation at the Crossroads: How Generative AI Platforms Manage Creativity and Content Safety

As text generators and AI-driven artists become household names, a pressing question emerges: How do these tools decide what’s safe to share and what isn’t? A recent study led by PhD student Lan Gao and undergraduate students Oscar Chen and Rachel Lee, together with Neubauer Professor Nick Feamster, Associate Professor Chenhao Tan, and Associate Professor Marshini Chetty of the Department of Computer Science, pulls back the curtain on content moderation in consumer-facing generative AI products, examining both the policies companies write and the lived experiences of everyday users.

From Community Rules to AI Toolkits

Online communities have long balanced the need for open dialogue with safeguards against harmful content. Now, as platforms like ChatGPT, Midjourney, and Gemini churn out creative content for millions, the responsibility for content moderation enters new territory. Unlike message boards where only the uploaded posts are checked, these AI tools must also judge the user instructions they’re given—and the responses they generate.

The research team first analyzed the public policies of 14 popular generative AI tools. They found that while most platforms are diligent about outlining what’s prohibited—ranging from hate speech to copyright violations—the actual guidance is often buried in a maze of terms, FAQs, and support pages. Few spell out exactly how users can report issues, or how they can appeal a moderation decision. In fact, “user-driven moderation” is mentioned far less than in traditional online communities.

Moderation: Successes and Sticking Points

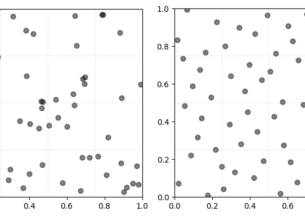

To understand how these policies play out in the real world, the team dove into public Reddit discussions, pulling from over a thousand posts about content moderation in generative AI tools. Their analysis reveals a nuanced landscape:

Successes: Users generally reported that obvious malicious content (think explicit imagery or scams) is blocked effectively—a relief for those concerned about the risks of AI-generated harm.

Frustrations: Innocent creative tasks frequently get tangled in the filters. Fiction writers lamented blocks on violence in historical or fantasy stories; artists hit brick walls over ambiguous word choices. “I just find myself afraid of triggering arbitrary warnings, and then just not using it,” one user reflected.

Inconsistencies also frustrated users. Identical requests could get different moderation outcomes, sometimes seemingly depending on luck or on the particular account being used. Explanations were often generic, with little insight into what went wrong or how to fix it. Appeals, when available, could move at a glacial pace.

“From what we saw in these discussions, the technical limitations and the opaque disclosures of the moderation systems have hampered the users’ creativity and sense of agency with generative AI tools,” said Gao. “These drawbacks could subtly affect how users approach risks associated with AI tools, and shift users’ personal expressions from their original purpose.”

Why It Matters Beyond the Screen

The implications ripple far beyond hobbyists and tinkerers. In classrooms, newsrooms, design firms, and elsewhere, people rely on generative AI tools both for creative inspiration and practical assistance. Overly strict filters and inconsistent enforcement don’t just cause headaches; they risk cutting off valuable new forms of expression and utility.

The study recommends that generative AI platforms unify and clarify their policies, offer meaningful feedback on moderation decisions, and adopt “soft moderation” (such as warnings rather than outright blocks) where possible. Involving users more directly in reporting and appeals could help platforms learn from mistakes and fine-tune their algorithms. Finally, making the moderation rules and processes more transparent—including the list of banned terms and how decisions are made—could demystify the process for users and enhance trust.

“As generative AI has quickly taken off in the last few years, tool makers have had to put guardrails in place to prevent the creation and distribution of malicious content,” said Chetty. “By improving the transparency and effectiveness of content moderation policies in generative AI tools, we can encourage users to satisfy their curiosity while also minimizing harm.”

Looking Ahead

As generative AI becomes ever more woven into daily life, the study’s authors hope these findings will guide both policymakers and product designers. The full study will be presented at the 34th Annual USENIX Security Symposium in August, and the related datasets are publicly available for those interested in delving deeper.