Q&A: Ian Foster on Receiving the 2023 IEEE Internet Award

Adapted from an article by Marguerite Huber published by Argonne National Laboratory.

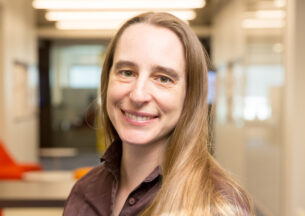

Ian Foster is widely considered the founder of grid computing, the precursor to cloud computing. Foster is the Arthur Holly Compton Distinguished Service Professor of Computer Science at the University of Chicago and the director of the Data Science and Learning division at Argonne National Laboratory. He is also the co-founder of the popular and long-lived data-sharing tool Globus.

Over the course of his career, Foster has been a pioneer in the computer sciences. In the mid-1990s, Foster and Carl Kesselman, a professor at the University of Southern California, created what came to be known as grid computing. It helped meet enormous new demands for computing power and data, driven initially by scientific research. They also created the technologies that laid the groundwork for the multibillion-dollar cloud computing industry.

To recognize his extraordinary achievements, the Institute of Electrical and Electronics Engineers chose Foster, along with Kesselman, as a recipient of the 2023 IEEE Internet Award. The award is given for exceptional contributions to the advancement of internet technology for network architecture, mobility and end-use applications. The 2023 Internet Award recognizes Foster and Kesselman’s contributions to the design, deployment and application of practical internet-scale global computing platforms.

“Receiving the IEEE Internet Award is such an honor,” Foster said. “Seeing the impact our work has had on the computing field and beyond is absolutely incredible and I am fortunate to be receiving this award with Carl Kesselman.”

The influence of Foster’s work runs deep, accelerating scientific discovery in numerous fields, including physics, geophysics, biology, biochemistry, chemistry, astronomy and materials science.

In addition to his impact on the sciences, Foster has advised hundreds of students and postdocs through his roles at Argonne and at UChicago. Foster also received the Association for Computing Machinery and IEEE Ken Kennedy Award in 2022 and gave his honorary lecture at the Supercomputing 22 conference.

Other awards Foster has received include the IEEE Charles Babbage Award, the Lovelace Medal of the British Computer Society and the Gordon Bell Prize for High Performance Supercomputing. He is an Argonne Distinguished Fellow; a fellow of the American Association for the Advancement of Science, ACM, BCS and IEEE; and a U.S. Department of Energy Office of Science Distinguished Scientist Fellow.

We asked Foster to expand on his role in establishing grid computing, how that set the stage for present-day cloud computing, and what is to come after it.

Q: What is grid computing and how were you involved in its early days? How did this change computing?

A: In today’s information age, computation underpins much of our lives. But where does all that information and computation reside? Some is on our smartphones and laptops, but most is in what we may vaguely think of as the “cloud,” from which we request it as needed — for example, when we want to watch a movie, book a flight or chat with a friend. In other words, computing today can be seen as a fundamental utility, much like electricity (delivered by the power grid).

I was first exposed to the potential of computing as a utility in the early 1990s, when early deployments of high-speed science networks enabled exciting experiments with remote computing. Why, I asked, did we need a computer on every desk, when we could access much faster computers and bigger datasets at remote laboratories?

To realize this vision of a “computing grid,” my group at Argonne and UChicago, along with many partners around the world, developed grid software and standards. Ultimately thousands of research institutions deployed these technologies, including our Globus software, to create regional, national and global grids that were used for many scientific computations.

Q: What is cloud computing? How did grid computing become the predecessor to cloud computing?

A: Fast forward to the 2000s. High-speed networks, previously available only to scientific laboratories, are proliferating. Large commercial data centers have been established by Amazon, Google, Microsoft and others to serve exploding demand for computation as a utility. New industries are leveraging these data centers to deliver new digital services to consumers, from streaming movies to booking travel online. The term “cloud” is used for this latest iteration of the computing-as-a-utility vision, which some describe as “grid with a business model.”

My colleagues and I were quick to embrace the possibilities offered by these commercial cloud computing platforms. In 2010, for example, we established the Globus research data management service, which today has more than 300,000 registered users at research laboratories and universities.

Globus, operated as software as a service on the Amazon cloud, allows users to move data rapidly and reliably between their desktops, research facilities, commercial clouds and elsewhere, and to automate the sophisticated data pipelines on which so much of modern science relies: a cloud-powered grid, if you will.

Q: What is next after cloud computing?

A: Grid and cloud were each made possible by increasingly widely deployed and capable physical networks — first among scientific laboratories, for grid, and then to homes and businesses, for cloud. But this reliance on physical connections means that these utilities can never be universal.

The next step in the computer revolution will be driven by the emergence of ultrafast wireless networks that will permit access to computing anywhere, anytime, with the only limit being the speed of light.

In this new “computing continuum,” we may compute next to a scientific instrument or at a field site when we need instant response (for example, to interpret observations as they are made), in a commercial cloud when we need reliability and scale, and at a supercomputing facility for specialized scientific computations.

As with grid and cloud, unanticipated new applications will emerge that build on these capabilities in unexpected ways, and new services will be needed to enable their use for science. It’s an exciting time.