New Wearable Device Controls Individual Fingers for Sign Language, Music Applications

Humans use their fingers for some of their most delicate communication. Typing on a keyboard or phone, conversing in sign language, and playing guitar or piano all require finely tuned movement and coordination across multiple digits. But many technologies intended to control or augment these activities have struggled to reproduce the precise gestures and motions they require.

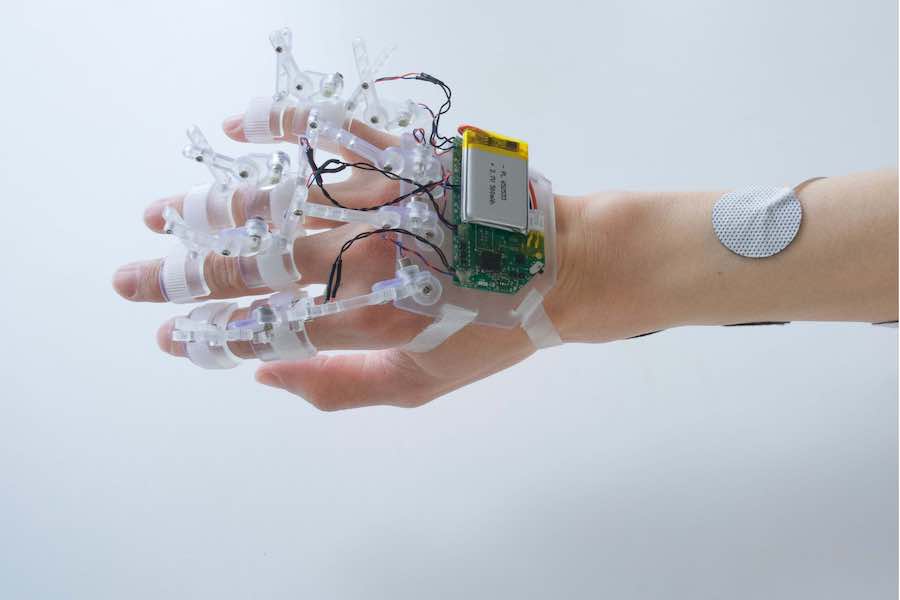

A new solution from the Human Computer Integration Laboratory, directed by UChicago CS assistant professor Pedro Lopes, tackles this problem by combining electrical muscle stimulation (EMS) with mechanical brakes. DextrEMS, a haptic device invented and developed by Lopes’ students Romain Nith, Shan-Yuan Teng, Pengyu Li, and Yujie Tao, fits unobtrusively on a user’s hand. As EMS electrodes on the forearm move the fingers into their desired positions, ratchet brakes at the finger joints lock them in the target gesture and restrict unwanted movement of the other fingers.

The work will be presented this week at the 2021 ACM Symposium on User Interface Software and Technology (UIST), one of the top human-computer interaction conferences.

[Update: The research received the Best Demo – People’s Choice Award!]

“DextrEMS is a means to actuate human fingers through a computer,” said Nith, a 2nd-year PhD student in the Department of Computer Science. “It’s the same idea as a traditional exoskeleton, where we are providing force feedback to the fingers, but we wanted to tackle it through the perspective of miniaturizing everything. By combining EMS and brakes, we can actually create movements that weren’t possible before and users can move fingers independently.”

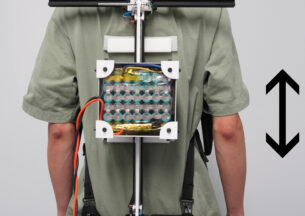

EMS works by affixing electrodes to the user’s skin and then sending small, painless electrical signals that cause muscles to contract. To control the fingers, EMS electrodes are commonly placed on the wrist and forearm, where the finger muscles originate. But because the electrodes sit far from the fingers, and the muscles for different fingers lie close together, it is hard to control the digits independently. Even the brain has trouble moving a single finger without also moving its neighbors (try it!).

Another limitation of EMS is the difficulty of holding a finger in position after the initial motion. Doing so electrically requires balancing signals between opposing muscles: those that flex the finger and those that extend it. But even the best EMS systems struggle to keep these muscles in balance, so the finger quivers.

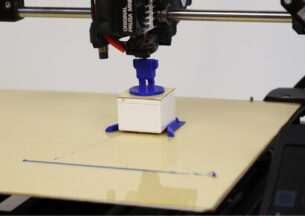

Nith and his colleagues solved this problem by adding 3D-printed, clear-resin brakes to EMS. Working somewhat like the parking brake on a car, each mechanism resembles a tiny gear with a movable lever that can lock the gear in place at the desired angle. The brakes are small enough not to interfere with the movement of adjacent fingers, strong enough to resist normal finger-muscle contraction, and nimble enough to adjust rapidly. Two such brakes are placed on each finger, above the joints at the base and middle of the digit.
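To make the contrast between EMS quiver and a braked joint concrete, here is a toy simulation of a single finger joint. It is a minimal sketch under invented assumptions, not the DextrEMS controller: each step, EMS closes a fixed fraction of the remaining error and adds random jitter standing in for flexor/extensor imbalance, and the brake is modeled as a ratchet whose pawl drops into the nearest gear tooth and then holds the joint still. The gain, jitter, and tooth pitch (`EMS_GAIN`, `JITTER_DEG`, `TOOTH_DEG`) and the function names are all hypothetical.

```python
import random

# Hypothetical parameters for illustration only; not taken from the DextrEMS paper.
EMS_GAIN = 0.3     # fraction of the remaining angle error EMS closes each step
JITTER_DEG = 3.0   # max per-step wobble from imperfect flexor/extensor balance
TOOTH_DEG = 5.0    # assumed angular pitch of the ratchet gear teeth


def simulate_joint(target_deg, steps, brake_at=None, seed=0):
    """Return the joint angle (degrees) at each time step.

    EMS pulls the joint toward target_deg, but each step adds random jitter
    to mimic quiver. If brake_at is set, the brake engages at that step: the
    angle snaps to the nearest ratchet tooth and stays there, ignoring any
    further EMS jitter.
    """
    rng = random.Random(seed)
    angle = 0.0
    locked = False
    history = []
    for step in range(steps):
        if brake_at is not None and step == brake_at:
            angle = round(angle / TOOTH_DEG) * TOOTH_DEG  # pawl drops into nearest tooth
            locked = True
        if not locked:
            angle += EMS_GAIN * (target_deg - angle)       # EMS drives toward the target
            angle += rng.uniform(-JITTER_DEG, JITTER_DEG)  # antagonist imbalance -> quiver
        history.append(angle)
    return history


def spread(values):
    """Peak-to-peak variation: a simple measure of quiver."""
    return max(values) - min(values)


ems_only = simulate_joint(60.0, steps=60)
with_brake = simulate_joint(60.0, steps=60, brake_at=20)

print(f"EMS only, quiver over last 40 steps:    {spread(ems_only[20:]):.2f} deg")
print(f"EMS + brake, quiver over last 40 steps: {spread(with_brake[20:]):.2f} deg")
```

In this model the EMS-only joint keeps wobbling near the 60° target, while the braked joint stops moving once the pawl engages, at the cost of landing on the nearest tooth rather than exactly on the target.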

“One advantage of this design is that a lot of exoskeletons today just create a shape and lock it in place,” Nith said. “But ours react as the environment changes. So in virtual applications such as a whack-a-mole game or a bouldering simulation, we can create motion where you actually feel a virtual object pushing against your finger.”

Virtual and augmented reality is just one application of the technology. In a video demo, Nith shows how the device could be used to communicate through sign language, even if the user is not familiar with the language’s gestures. A phone app, also developed by the Human Computer Integration Lab, uses computer vision to recognize signs and translate them into readable text. The user then types a response, and the app sends EMS signals to the DextrEMS device to help them spell out the correct signs. While the device cannot yet perform finger-crossing and other subtle gestures used in sign language, it is a first step toward a full multimodal translator.
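The reply half of the demo, turning typed text into a sequence of hand poses, can be sketched as a lookup from letters to target angles for the two braked joints on each finger. This is a hypothetical illustration: the `HANDSHAPES` table holds placeholder angles rather than real fingerspelling handshapes, it covers only two letters and the four fingers (how the thumb is handled is not stated), and `JointCommand` and `plan_fingerspelling` are invented names. The app's actual protocol for sending EMS signals is not described in the article.

```python
from dataclasses import dataclass

# This sketch covers the four fingers only; thumb handling is not described.
FINGERS = ("index", "middle", "ring", "pinky")
JOINTS = ("base", "middle")  # the two braked joints on each finger


@dataclass(frozen=True)
class JointCommand:
    finger: str
    joint: str
    angle_deg: float  # hypothetical target flexion for this joint


# Hypothetical handshape table: (base, middle) flexion in degrees per finger.
# These numbers are placeholders, NOT real fingerspelling data.
HANDSHAPES = {
    "a": {f: (90.0, 90.0) for f in FINGERS},  # placeholder: all fingers curled
    "b": {f: (0.0, 0.0) for f in FINGERS},    # placeholder: all fingers straight
}


def plan_fingerspelling(text):
    """Turn typed text into one pose (a list of joint commands) per letter."""
    poses = []
    for ch in text.lower():
        if ch.isspace():
            continue  # word gaps get no handshape in this sketch
        shape = HANDSHAPES.get(ch)
        if shape is None:
            # e.g. shapes needing crossed fingers, which the device cannot form yet
            raise ValueError(f"no supported handshape for {ch!r}")
        poses.append([
            JointCommand(finger, joint, angle)
            for finger in FINGERS
            for joint, angle in zip(JOINTS, shape[finger])
        ])
    return poses


for pose in plan_fingerspelling("a b"):
    print([(c.finger, c.joint, c.angle_deg) for c in pose])
```

Raising an error for letters without an entry mirrors the limitation described above: a shape the device cannot form is rejected rather than silently approximated.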

The DextrEMS technology also shows promise as a tool for helping users play instruments such as piano or guitar. By locking the wearer’s hand into the configurations for different chords, the device lets them directly experience proper technique during a tutorial, which may even improve their playing after they take the device off.

“Now that we have DextrEMS, we can make a computer guide users to perform fine-motor skills we never thought possible,” Lopes said. “This will allow us to extend our previous work in EMS, in which we showed that EMS is beneficial in acquiring faster reaction time, even after you remove the electrodes. Now, with DextrEMS, we hope to understand whether computers can assist users in much more realistic tasks beyond simple reaction time tests.”

The paper describing the research, “DextrEMS: Increasing Dexterity in Electrical Muscle Stimulation by Combining it with Brakes,” is one of three papers from the Human Computer Integration Lab appearing at UIST 2021. You can watch Nith’s full UIST presentation below.