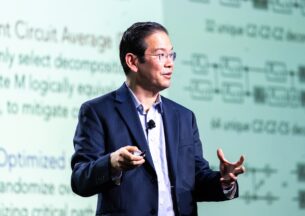

Tengyu Ma (Stanford) - Designing Explicit Regularizers for Deep Models

Designing Explicit Regularizers for Deep Models

I will discuss some recent results on designing explicit regularizers to improve the generalization performance of deep neural networks. We derive data-dependent generalization bounds for deep neural networks. We empirically regularize these bounds and obtain improved generalization performance (in terms of standard accuracy or robust accuracy). I will also touch on recent results on applying these techniques to imbalanced datasets.

Based on joint work with Colin Wei, Kaidi Cao, Adrien Gaidon, and Nikos Arechiga.

Host: Nati Srebro

Tengyu Ma

Tengyu Ma is an assistant professor of Computer Science and Statistics at Stanford University. He received his Ph.D. from Princeton University and his B.E. from Tsinghua University. His research interests include topics in machine learning and algorithms, such as deep learning and its theory, non-convex optimization, deep reinforcement learning, representation learning, and high-dimensional statistics. He is a recipient of the NIPS’16 best student paper award, the COLT’18 best paper award, and the ACM Doctoral Dissertation Award Honorable Mention.