Exploring 3D Paintbrush: An AI That Colors with Words

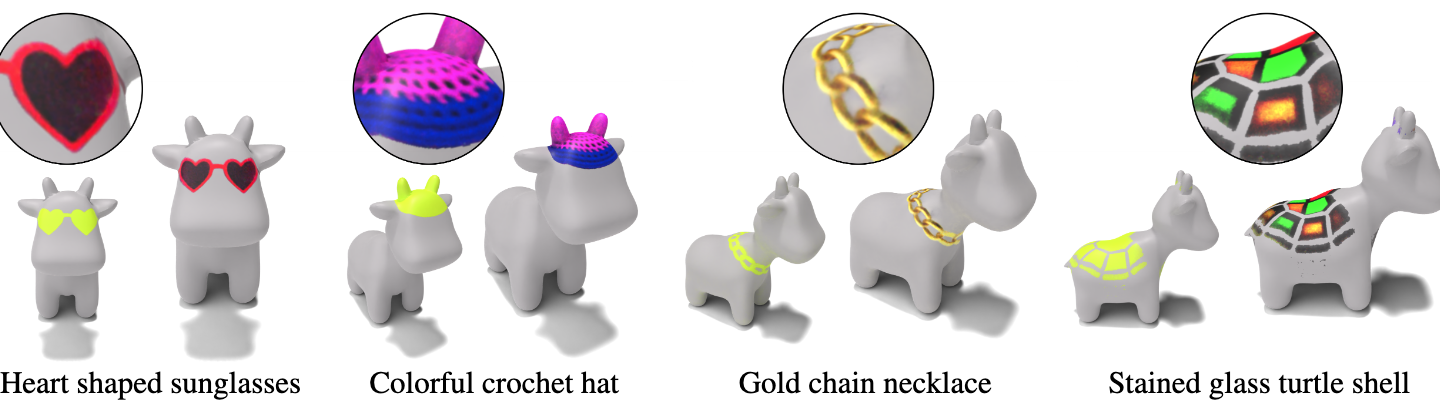

Imagine a rabbit wearing a camo poncho, or a cow with a chain necklace. Easy, right? As humans, we have no trouble visualizing things that do not exist, because we have an inherent understanding of the objects involved. The same has not been so easy for a computer. Much progress has been made in altering the texture and appearance of an object in its entirety, such as changing the color of the cow or morphing the cow into a pig. However, little has been done to generate detailed stylistic edits on a highly specific region of an object, with the location of the edit specified by nothing more than a text prompt. To address this problem, second-year PhD student Dale Decatur and a team of researchers from assistant professor Rana Hanocka’s 3DL lab at the University of Chicago Department of Computer Science have created a technique called 3D Paintbrush.

“It turns out, that’s actually a pretty hard problem,” said Decatur, who developed the project. “What if we could just use text to describe some part and get an explicit segmentation of that part itself in 3D? That was how the first project started out.”

In the paper titled “3D Paintbrush: Local Stylization of 3D Shapes with Cascaded Score Distillation,” the researchers introduce a new technique called Cascaded Score Distillation (CSD) that enables local, text-driven, high-resolution editing. They observed that pre-trained 2D cascaded models, on which the 3D model is built, consist of multiple stages, each operating at a different resolution and with a different degree of global understanding.

“Existing methods only use the first stage of the multi-stage supervision model,” Decatur stated. “I was actually surprised. Why not leverage the power of multiple stages? Because we use multiple stages, higher resolution stages have the ability to provide higher resolution supervision.” CSD therefore distills multiple stages at once to produce a high-resolution local texture on an existing object.
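
The idea of supervising with several stages at once can be illustrated with a toy sketch. This is not the authors' implementation: a 1D list stands in for the texture, fixed target signals stand in for the supervision a cascaded model would provide at each stage, and every name here (`stage_gradient`, `cascaded_gradient`, `optimize`, the stage weights) is hypothetical. It only shows how a weighted sum of per-stage gradients lets a coarse stage set the overall look while a finer stage fills in detail.

```python
def downsample(signal, factor):
    """Average groups of `factor` samples (stand-in for a lower-resolution view)."""
    return [sum(signal[i:i + factor]) / factor for i in range(0, len(signal), factor)]


def stage_gradient(texture, target, factor):
    """Gradient of 0.5 * ||downsample(texture) - target||^2 w.r.t. the texture."""
    low_res = downsample(texture, factor)
    residual = [a - b for a, b in zip(low_res, target)]
    # Backpropagate through the averaging: each sample gets 1/factor of its group's residual.
    return [r / factor for r in residual for _ in range(factor)]


def cascaded_gradient(texture, targets, factors, weights):
    """Weighted sum of the gradients supplied by every stage (assumed weighting scheme)."""
    total = [0.0] * len(texture)
    for target, factor, weight in zip(targets, factors, weights):
        grad = stage_gradient(texture, target, factor)
        total = [t + weight * g for t, g in zip(total, grad)]
    return total


def optimize(texture, targets, factors, weights, lr=0.5, steps=200):
    """Plain gradient descent on the combined objective."""
    texture = list(texture)
    for _ in range(steps):
        grad = cascaded_gradient(texture, targets, factors, weights)
        texture = [t - lr * g for t, g in zip(texture, grad)]
    return texture


if __name__ == "__main__":
    targets = [[1.0], [0.0, 2.0, 0.0, 2.0]]  # coarse stage, fine stage (toy values)
    factors = [4, 1]                         # coarse stage sees a 4x downsampled view
    print(optimize([0.0] * 4, targets, factors, [1.0, 0.0]))  # first stage only
    print(optimize([0.0] * 4, targets, factors, [1.0, 1.0]))  # both stages
```

In this toy setting, using only the coarse stage yields a flat texture that matches the target on average, while adding the fine stage recovers the sample-level pattern.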

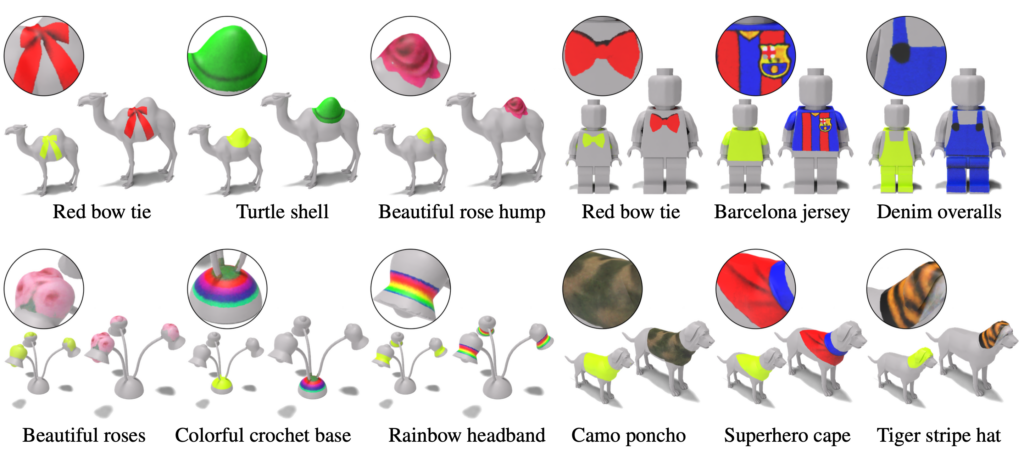

Key to the method is that localization and stylization are learned simultaneously, each informing the other to improve both the sharpness of the localization and the detail of the edit. By combining this synergistic approach with CSD, 3D Paintbrush achieves new levels of detail across different text prompts and a diverse group of 3D objects. When asked to place a Barcelona jersey on a Lego humanoid, 3D Paintbrush not only accurately locates the torso area to color the jersey, but also accurately synthesizes the crest, down to the golden football and the red “plus” sign. Nor is 3D Paintbrush limited to “plausible” or realistic edits. Though no one has seen shin guards on a giraffe or a cow with a turtle shell, 3D Paintbrush can place both in their respective locations with a high degree of precision.
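
One way to picture how a localization and a texture can interact is a per-point blend, sketched below. This is an assumption made for illustration rather than the paper's exact formulation: each surface point is given a hypothetical localization probability and a hypothetical edit color, and the final color mixes the edit with the original color in proportion to that probability. The function names and sample values are invented for the example.

```python
def composite(base_color, edit_color, probability):
    """Blend an edit color over a base color, weighted by a localization probability."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return tuple(
        probability * edit + (1.0 - probability) * base
        for base, edit in zip(base_color, edit_color)
    )


def stylize(points):
    """Apply the blend to every (base_color, edit_color, probability) triple."""
    return [composite(base, edit, prob) for base, edit, prob in points]


if __name__ == "__main__":
    gray, red = (0.5, 0.5, 0.5), (1.0, 0.0, 0.0)
    # Three toy surface points: inside the edited region, on its border, outside it.
    print(stylize([(gray, red, 1.0), (gray, red, 0.5), (gray, red, 0.0)]))
```

Because the blended color depends on both the probability and the edit color, any supervision applied to the blended result would affect both quantities together, which is one intuition for why learning them jointly could sharpen each.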

The official code is available on GitHub, where users can download it along with a demo to see 3D Paintbrush in action. By entering a text description of a 3D object (e.g., “cow”) and the desired edit (e.g., “colorful crochet hat”), one can see how the computer infers the region without any manual selection by the user. Because changes to 3D models and avatars currently rely on manual region selection and editing by someone well-versed in 3D modeling tools, Decatur hopes to see this new technology applied to 3D modeling and video game animation. With 3D Paintbrush, the process would not only be entirely automated, but would also require no prior knowledge of 3D modeling software or specific expertise in the field, making it more accessible for novices and hobbyists to experiment in an area with growing potential.

“We hope that this research will allow everyone,” Decatur said, “regardless of technical background, to participate in the 3D modeling world.”

Building upon 3D Paintbrush, Decatur plans to explore localized editing of an object’s geometry as well (e.g., adding horns to a cow), since 3D Paintbrush’s edits are currently constrained to the surface of the object. To learn more about his research and that of the rest of 3DL, you can visit their lab page.