Ten Papers at CHI 2021 Flourish Frontiers of HCI Research at UChicago CS

The 2021 edition of the ACM CHI conference, the premier gathering of human-computer interaction (HCI) researchers, is entirely virtual due to the ongoing COVID-19 pandemic. In a way, that format suits the field, which explores the myriad ways that technology can enhance human performance and experience while also examining the potential consequences of these innovations. An online conference, while not ideal, shows the power of HCI to preserve and create community in the face of a global crisis.

UChicago CS has firmly established itself within that community, with an emerging group of faculty and student researchers representing the many different corners of HCI. This year, an astonishing ten papers by UChicago CS authors were accepted to the prestigious conference, with one receiving a Best Paper Award (given to the top 1 percent of submissions) and three others receiving Honorable Mentions (top 5 percent). The full roster exhibits the breadth of HCI research at UChicago, covering everything from wearable technologies and virtual reality hardware to new data visualization tools and the security advice given to Black Lives Matter protesters.

As an accompaniment to the main CHI conference, UChicago CS organized its second annual “CHIcago” Symposium, giving students the chance to present their CHI work to each other and to a broader audience in advance of the main event. The full video of the CHIcago Symposium is available to watch online.

Electrical Influence

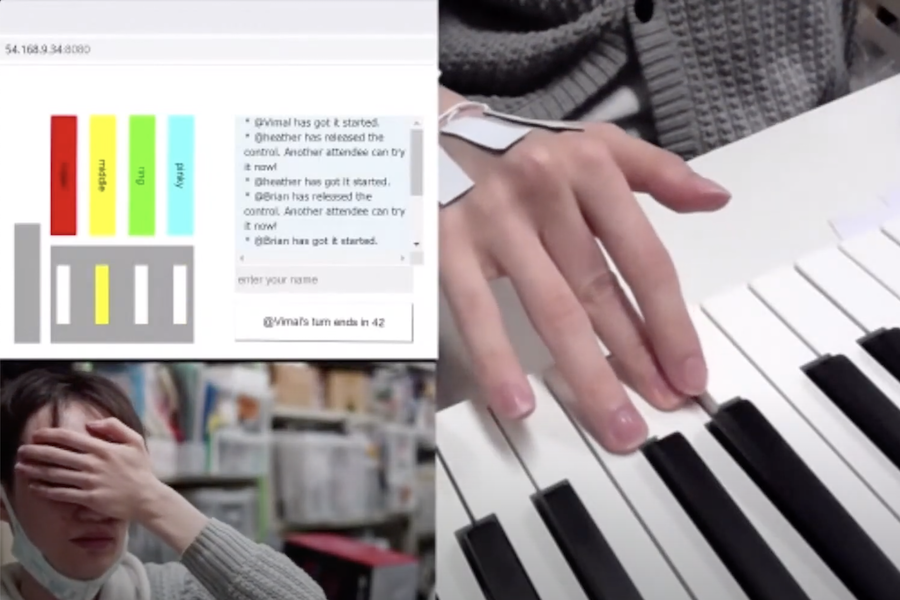

In the opening session of CHIcago, Akifumi Takahashi placed his hand on a piano and pasted a web address into the event’s virtual chat. From that webpage, volunteers anywhere in the world could click buttons corresponding to Takahashi’s fingers. Each click stimulated the muscles of his hand and made him press a key in Japan, letting the audience orchestrate a musical performance from thousands of miles away.

The jaw-dropping demo of Takahashi’s Best Paper-winning research, “Increasing Electrical Muscle Stimulation’s Dexterity by means of Back of the Hand Actuation” with Jas Brooks, Hiroyuki Kajimoto, and Pedro Lopes, set the tone for the event with its inventive use of technology to expand human ability. Takahashi, who spent time as a visiting PhD student at UChicago in early 2020, adapted electrical muscle stimulation (EMS) by moving the electrodes from the wrist to the back of the hand, which lets the stimulation produce fine-tuned finger movements for applications such as playing guitar, drums, and piano.

(The project also won the People’s Choice Best Demo Award at the conference.)

Other CHI papers from Lopes’ Human-Computer Integration laboratory explored additional uses of electrical stimulation. A paper presented by Shunichi Kasahara of Sony CSL, describing research done with Lopes and Jun Nishida, expanded upon previous work on the relationship between EMS-triggered actions, agency, and learning. The researchers trained subjects on a fast motor task using a slightly delayed EMS trigger, timed so that subjects felt they had initiated each action themselves. This training produced lasting effects: even after the electrodes were removed, these subjects remained faster at the task than those who had trained with “perfect” machine-assisted timing.

A different target of electrical stimulation was described by PhD student Jas Brooks, who developed a small device that produces “stereo smell” by activating the trigeminal nerve inside the nose. What we experience as smell is actually a combination of signals from our olfactory system and this nerve, which detects sensations such as the warmth of cinnamon or the sting of wasabi. Brooks’ device, designed with Shan-Yuan Teng, Jingxuan Wen, Romain Nith, Nishida, and Lopes, fits inside the nostrils and can be programmed to activate the trigeminal nerve when it detects hazards such as gas or carbon monoxide, alerting people with an impaired sense of smell to dangerous conditions.

New Horizons for Security

A second live demo during the CHIcago symposium showcased yet another innovative use of electrical muscle stimulation. With electrodes covering her forearm and sensors on her fingers, PhD student Zhuolin Yang triggered EMS “challenges” that provoked involuntary hand movements. The precise “signature” of those muscle responses allowed Yang to unlock a security barrier, demonstrating a new form of authentication that needs no passwords or PIN codes.

The approach, “User Authentication via Electrical Muscle Stimulation,” was presented by Yang and co-author Yuxin Chen from Ben Zhao and Heather Zheng’s SANDLab. Because every individual’s muscles respond differently to EMS, their system creates a new type of biometric that can be used for identification. But unlike fingerprints or retinal scans, which present the same pattern every time, there are millions of different EMS test patterns that can be created, so the system can pose a different challenge from one login to the next. The system is also described in more detail in a recent feature.
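To see why a large space of stimulation patterns matters, here is a minimal, hypothetical sketch of a generic challenge-response check in Python. It is not the SANDLab system: the `EMSAuthenticator` class, the tuple encoding of challenges and responses, the Euclidean-distance match, the threshold, and the `alice_hand` stand-in for real muscle measurements are all assumptions made for illustration. The idea it shows is that each login uses a fresh challenge, so a response an attacker recorded during one login does not match the template for the next.

```python
import math
import random

# Hypothetical encodings: a challenge is a tuple of stimulation levels, one per
# electrode; a response is a tuple of features measured from the hand.
Challenge = tuple[int, ...]
Response = tuple[float, ...]

class EMSAuthenticator:
    """Generic challenge-response check (a sketch, not the published system)."""

    def __init__(self, templates: dict[Challenge, Response], threshold: float):
        self.templates = templates          # enrolled: challenge -> expected response
        self.threshold = threshold          # max distance still counted as a match
        self.used: set[Challenge] = set()   # challenges already issued

    def issue_challenge(self, rng: random.Random) -> Challenge:
        """Pick an enrolled challenge that has never been issued before."""
        fresh = [c for c in self.templates if c not in self.used]
        if not fresh:
            raise RuntimeError("no unused challenges left; the user must re-enroll")
        challenge = rng.choice(fresh)
        self.used.add(challenge)
        return challenge

    def verify(self, challenge: Challenge, measured: Response) -> bool:
        """Accept if the measured response is close to this challenge's template."""
        return math.dist(self.templates[challenge], measured) <= self.threshold

def alice_hand(challenge: Challenge) -> Response:
    """Hypothetical stand-in for a real measurement: a fixed, person-specific response."""
    return tuple(0.8 * level + 0.3 * i for i, level in enumerate(challenge))

challenges = [(1, 0, 2), (0, 3, 1), (2, 2, 0)]
auth = EMSAuthenticator({c: alice_hand(c) for c in challenges}, threshold=0.2)
rng = random.Random(0)

first = auth.issue_challenge(rng)
print(auth.verify(first, alice_hand(first)))   # True: genuine response
recorded = alice_hand(first)                   # an attacker records this response

second = auth.issue_challenge(rng)
print(auth.verify(second, recorded))           # False: replay of an old response
```

A real system would likely replace `alice_hand` with sensor readings and the simple distance test with a trained model; the sketch only illustrates why never-reused challenges make replayed responses fail.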

In 2020, questions of security and privacy took on real-world urgency at the Black Lives Matter protests that swept the globe last summer. For many protesters, it was their first experience of a mass protest subject to police surveillance, and they may not have known how to protect their digital security and privacy.

In a paper that received an Honorable Mention, undergraduate student Maia Boyd led a team, including undergraduate student Jamar Sullivan and advisors Marshini Chetty and Blase Ur, that analyzed a collection of “safety guides” distributed to protesters through social media and news articles. Many guides recommended precautions such as turning off fingerprint or face unlocking on phones, using end-to-end encrypted messengers such as Signal, and keeping identifiable information out of social media posts.

However, in a subsequent survey of people who had participated in protests, Boyd found that many disregarded this advice, feeling that it didn’t apply to them. The researchers plan to use these findings to develop new recommendations and interactive apps that personalize safety guidance, showing users explicitly what information law enforcement could gather if it gained access to their phones.

Sensory Realism for Virtual Worlds

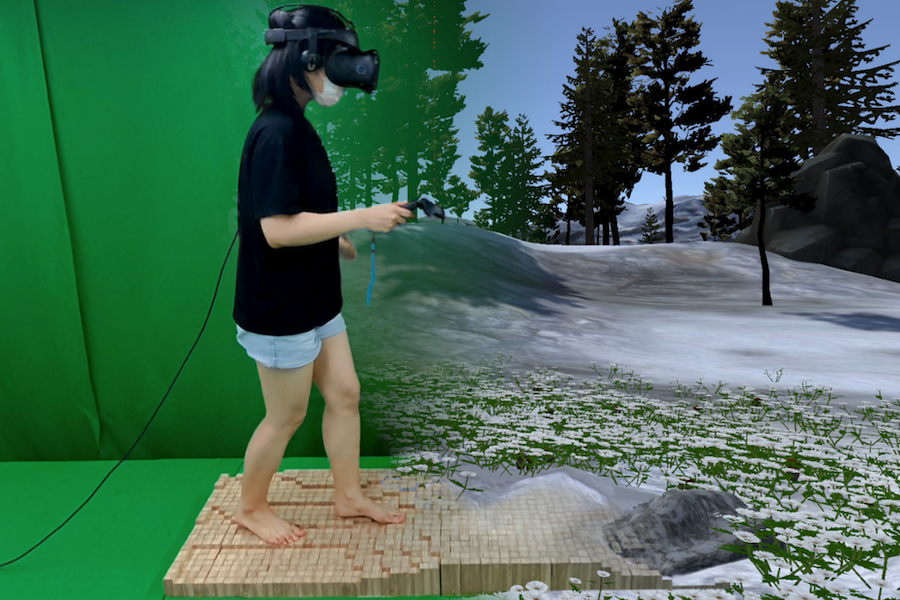

One of the great frontiers of human-computer interaction is making virtual experiences feel more genuine. While VR headsets have now reached consumers, their immersion is still limited: they engage sight and hearing but neglect the other senses. Another group of papers addressed these limitations in both virtual and mixed reality, with new inventions that blend technology with genuine tactile stimulation.

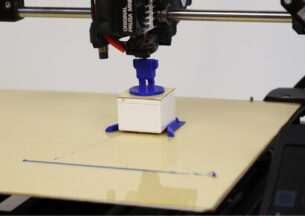

The Elevate simulator, presented by Kongpyung Moon of KAIST (and co-authored for CHI by UChicago PhD student Shan-Yuan Teng, Brooks, and Lopes), is a floor of 1,200 magnetically controlled pins that reshapes itself into different terrains. The structure is strong enough to stand or walk on, and it can change shape dynamically to emulate landscapes, stairs, or stepping stones that mirror VR spaces, or even to create an ever-changing mini-golf course at home.

Another sensory challenge for VR and augmented reality (AR) designers is creating a realistic fingertip touch experience. Small motors can be placed on the fingertip to provide pressure or texture when a user “presses” a virtual object, but in a mixed-reality setting, this hardware gets in the way of touching real-world objects. Touch&Fold, an Honorable Mention paper presented by Teng, addresses this problem by automatically retracting the finger-mounted device when the user switches from virtual to real interactions, such as while following an AR tutorial for bike repair.

Haptic feedback has also been proposed as a way to make everyday objects such as appliances, walls, and surfaces interactive for blind people or for eyes-free activities. However, it’s impractical to outfit an entire house with electronics just to generate a simple vibration when something is touched. MagnetIO, a paper presented by PhD student Alex Mazursky and co-authored by Teng, Nith, and Lopes, solves this by creating interactive patches made from a soft, stretchable magnet. The user wears a fingertip device that vibrates whenever it contacts one of these patches, which can easily be added to objects or devices.

Simpler Systems for Smart Homes and Data Visualization

Many people interact with today’s smart home devices through trigger-action programming (TAP), also known as “if this, then that” rules. Taken one at a time, these rules can be simple, such as “If I fall asleep, then lock the front door.” When many rules are active at once, though, they can interact in ways that produce undesirable effects, which are confusing to untangle and make related TAP programs difficult to compare.
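The cascading behavior is easier to see in code. The following is a minimal Python sketch under simplifying assumptions: each rule maps one hypothetical trigger event to one action, and an action is itself treated as an event that can fire other rules. The event names and the `Rule`/`run` helpers are illustrative, not taken from the paper. Pairing the article’s example rule with a second, innocent-looking rule (“if the front door locks, then switch the thermostat to away mode”) means that falling asleep now also sets the thermostat to away mode, an effect neither rule states on its own.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    """A hypothetical trigger-action rule: when `trigger` happens, do `action`."""
    trigger: str
    action: str

def run(rules: list[Rule], event: str, max_steps: int = 20) -> list[str]:
    """Return every action fired, in order, after `event` occurs.

    Actions are treated as new events, so one rule can trigger another.
    `max_steps` stops runaway chains, e.g. two rules that trigger each other.
    """
    fired: list[str] = []
    queue = deque([event])
    while queue:
        current = queue.popleft()
        for rule in rules:
            if rule.trigger == current:
                if len(fired) >= max_steps:
                    return fired  # probably a cycle between rules
                fired.append(rule.action)
                queue.append(rule.action)
    return fired

program = [
    Rule("user_asleep", "front_door_locked"),      # "If I fall asleep, then lock the front door."
    Rule("front_door_locked", "thermostat_away"),  # a second, unrelated-looking rule
]
print(run(program, "user_asleep"))  # ['front_door_locked', 'thermostat_away']
```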

New comparison interfaces designed by PhD student Valerie Zhao, with co-authors Lefan Zhang, Bo Wang, Michael Littman, Shan Lu, and Blase Ur, go beyond the text-based comparisons typically used in software engineering, letting users compare differences in programs’ outcomes and high-level properties, not just the text of their rules. The researchers found that these methods help non-technical users better understand what TAP programs are doing. The research was the third UChicago CS paper to receive an Honorable Mention award from CHI reviewers.
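One way to picture an outcome-based comparison is to run both programs on the same events and compare what actually happens, rather than diffing their rule text. The Python sketch below is a hypothetical illustration, not the interfaces from the paper; the `(trigger, action)` tuple encoding, the event names, and the `outcome`/`compare_outcomes` helpers are all assumptions. Programs A and B differ in a single rule and behave identically when the user falls asleep, but only A switches the thermostat to away mode when someone locks the door by hand, a consequence that a line-by-line text diff leaves the reader to work out.

```python
from collections import deque

Rule = tuple[str, str]  # hypothetical encoding: (trigger event, action event)

def outcome(program: list[Rule], event: str) -> frozenset[str]:
    """All actions that eventually happen after `event`, following rule chains."""
    seen: set[str] = set()
    queue = deque([event])
    while queue:
        current = queue.popleft()
        for trigger, action in program:
            if trigger == current and action not in seen:
                seen.add(action)      # each action is recorded once, so cycles terminate
                queue.append(action)
    return frozenset(seen)

def compare_outcomes(a: list[Rule], b: list[Rule], events: list[str]) -> dict:
    """For each event where outcomes differ: (actions only in a, actions only in b)."""
    diffs = {}
    for event in events:
        out_a, out_b = outcome(a, event), outcome(b, event)
        if out_a != out_b:
            diffs[event] = (sorted(out_a - out_b), sorted(out_b - out_a))
    return diffs

program_a = [("user_asleep", "front_door_locked"), ("front_door_locked", "thermostat_away")]
program_b = [("user_asleep", "front_door_locked"), ("user_asleep", "thermostat_away")]

print(compare_outcomes(program_a, program_b, ["user_asleep", "front_door_locked"]))
# {'front_door_locked': (['thermostat_away'], [])}
```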

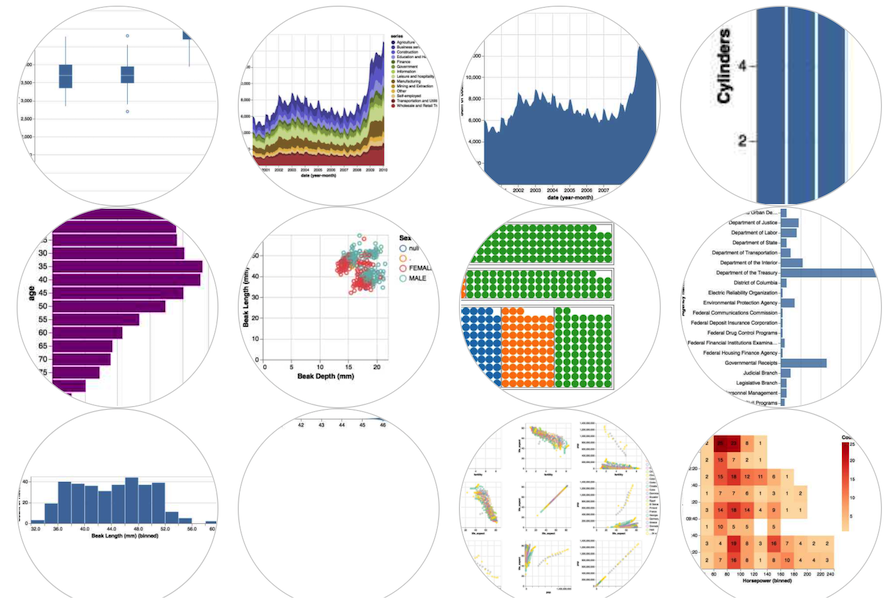

People unfamiliar with programming also struggle to create visualizations from data, an increasingly desirable skill in many occupations. Andrew McNutt and Ravi Chugh’s CHI paper describes Ivy, a visualization editor that combines the benefits of popular data visualization tools such as Tableau and Excel while lowering barriers to entry for inexperienced data wranglers. Ivy lets users explore their data visually through a variety of recommended templates while also drawing on a user-created library of example visualizations from Vega-Lite.
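The paragraph above describes Ivy’s templates only at a high level, so the following is a minimal sketch of the general idea of a parameterized chart template, not Ivy’s actual template format. It assumes a hypothetical `"[name]"` placeholder syntax, made-up `fill`/`instantiate` helpers, and made-up example rows; the output is an ordinary Vega-Lite bar-chart specification (`mark`, `encoding`, `data.values`). Supplying different field names reuses the same chart design on new data without writing the specification by hand.

```python
import json

# Hypothetical template: a Vega-Lite bar chart whose field names are left as
# "[placeholder]" strings to be filled in later (an assumed syntax, not Ivy's).
BAR_TEMPLATE = {
    "$schema": "https://vega.github.io/schema/vega-lite/v5.json",
    "mark": "bar",
    "encoding": {
        "x": {"field": "[category]", "type": "nominal"},
        "y": {"field": "[value]", "type": "quantitative", "aggregate": "sum"},
    },
}

def fill(node, params):
    """Return a copy of `node` with every "[name]" string replaced by params[name]."""
    if isinstance(node, dict):
        return {key: fill(value, params) for key, value in node.items()}
    if isinstance(node, list):
        return [fill(item, params) for item in node]
    if isinstance(node, str) and node.startswith("[") and node.endswith("]"):
        return params[node[1:-1]]
    return node

def instantiate(template, params, rows):
    """Fill the template's placeholders and attach the rows as inline data."""
    spec = fill(template, params)
    spec["data"] = {"values": rows}
    return spec

# Made-up example data for illustration only.
rows = [
    {"region": "North", "sales": 3},
    {"region": "South", "sales": 5},
]
spec = instantiate(BAR_TEMPLATE, {"category": "region", "value": "sales"}, rows)
print(json.dumps(spec, indent=2))
```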
