New research unites quantum engineering and artificial intelligence

Large-scale machine learning is already tackling some of humanity’s greatest challenges, including creating more effective vaccines and cancer immunotherapies, building artificial proteins and locating new companion materials for biocompatible electronics.

But two words have never been applied to this groundbreaking technology: cheap and sustainable.

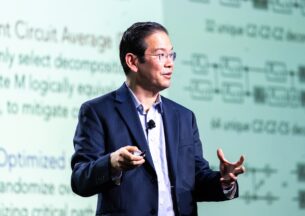

An interdisciplinary team including Prof. Liang Jiang and CQE IBM postdoctoral scholar Junyu Liu from the Pritzker School of Molecular Engineering at the University of Chicago, UChicago graduate students Minzhao Liu and Ziyu Ye, Argonne computational scientist Yuri Alexeev, and researchers from UC Berkeley, MIT, Brandeis University and Freie Universität Berlin hopes to change that.

In a paper published this month in Nature Communications, the team showed how incorporating quantum computing into the classical machine-learning process can potentially help make machine learning more sustainable and efficient.

“This work comes at a time when there are significant advancements – and potential challenges – in classical machine learning,” Jiang said. “The quantum device could help to address some of those challenges.”

The team designed end-to-end quantum machine learning algorithms that they expect to be timely for the current machine learning community and that come, to an extent, with theoretical guarantees.

“This is a convergence of the quantum field and advances in the field of artificial intelligence,” Alexeev said.

Big data, big costs

GPT-3, the initial learning model behind the popular ChatGPT chatbot, cost $12 million to train. Providing the power for that massive computational task produced more than 500 tons of CO2-equivalent emissions. Similar information has not been made public on GPT-3.5 and GPT-4 – the current models behind ChatGPT – but the costs in cash and carbon are believed to be much larger.

This, as both machine learning and the environmental impacts of climate change continue to grow, is unacceptable.

Teaching a machine to learn requires uploading massive data sets – reading material for the digital student to sort through. The paper benchmarked large machine learning models from 7 million to 103 million parameters.

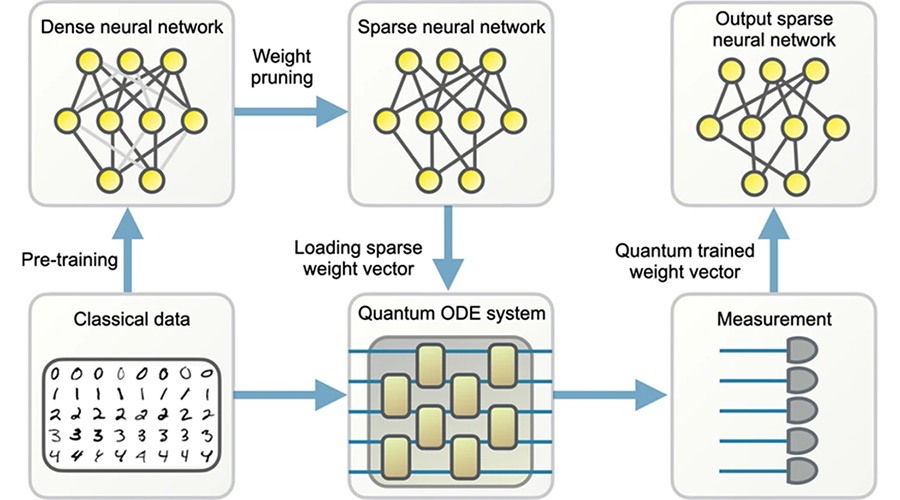

In classical machine learning, those data are uploaded and then “pruned” – compressed using algorithms that remove non-critical or redundant information to streamline the process. The streamlined data set is then downloaded and re-uploaded.
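To make the idea of pruning concrete, here is a minimal sketch in Python. It assumes one common criterion, magnitude-based pruning, in which the smallest values are treated as non-critical and set to zero. The article does not say which pruning criterion the team used, so the function name `magnitude_prune`, the `keep_fraction` parameter and the toy numbers are all hypothetical.

```python
def magnitude_prune(weights, keep_fraction):
    """Zero out all but the largest-magnitude entries.

    Hypothetical helper for illustration only; magnitude-based pruning is
    an assumed criterion, not necessarily the one used in the paper.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    keep = max(1, round(len(weights) * keep_fraction))
    # Rank indices from largest to smallest absolute value.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    kept = set(ranked[:keep])
    # Keep the top entries unchanged and replace the rest with zero.
    return [w if i in kept else 0.0 for i, w in enumerate(weights)]


# Toy example: keep the largest half of six values.
weights = [0.8, -0.05, 0.02, -1.2, 0.3, 0.01]
pruned = magnitude_prune(weights, keep_fraction=0.5)
print(pruned)  # [0.8, 0.0, 0.0, -1.2, 0.3, 0.0]
```

In this toy case half of the entries become zero, so only three values need to be stored or moved instead of six.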

The more data the algorithms review, the better and more intelligent the choices they produce. It’s a massive process that has required similarly massive processing power, with the accompanying cost and carbon emissions.

Quantum boosts

Incorporating quantum computing into the process after the initial prune could help cut the costs of that download and re-upload without sacrificing efficiency.

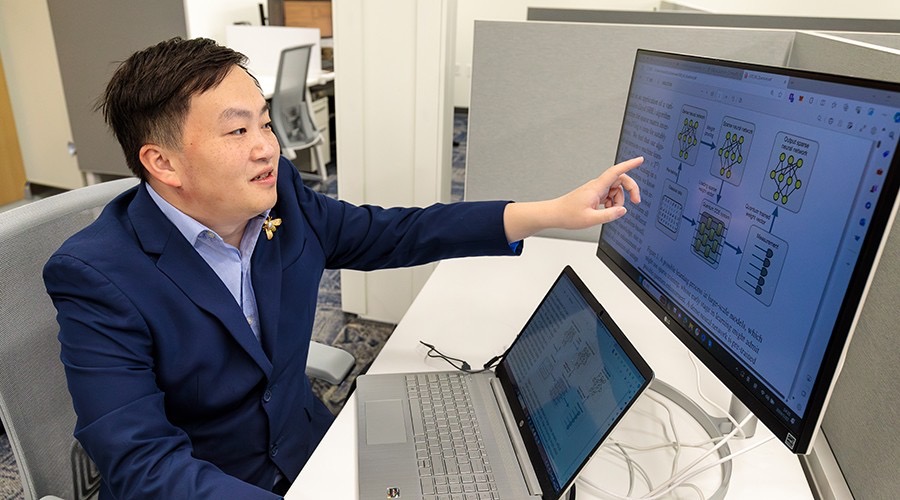

“We are trying to use this algorithm as a basic pipeline for solving some gradient descent equations used in machine learning models,” Junyu Liu said. “The innovation of this research was hybrid. Firstly, there are limitations about using quantum computing devices to solve some big data problems. One is the input output problem, which is that there’s a bottleneck about uploading the data for devices, and also downloading the data from the devices.”

The key was to make the model sparse enough that users will have low overhead uploading the data, essentially getting rid of that bottleneck.
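A purely classical toy sketch in Python can illustrate why sparsity lowers the input-output overhead. It is not the team's quantum algorithm. It assumes a simple quadratic loss and a fixed pruning mask, and every name in it (`sparse_gradient_step`, `to_sparse`, `target`, `mask`) is hypothetical.

```python
def sparse_gradient_step(params, grad_fn, mask, lr):
    """One gradient-descent step that updates only the unpruned parameters."""
    grads = grad_fn(params)
    # Pruned positions (mask is False) stay at exactly zero.
    return [p - lr * g if m else 0.0 for p, g, m in zip(params, grads, mask)]


def to_sparse(params):
    """Store only the nonzero entries as an {index: value} mapping."""
    return {i: p for i, p in enumerate(params) if p != 0.0}


# Assumed toy loss: L(w) = sum_i (w_i - t_i)^2, with gradient 2 * (w_i - t_i).
target = [1.0, 0.0, 0.0, -2.0]


def grad_fn(w):
    return [2.0 * (wi - ti) for wi, ti in zip(w, target)]


mask = [True, False, False, True]  # only two of the four parameters survive pruning
params = [0.0, 0.0, 0.0, 0.0]
for _ in range(50):
    params = sparse_gradient_step(params, grad_fn, mask, lr=0.1)

sparse = to_sparse(params)
print(len(sparse), "of", len(params), "entries need to be transferred")
```

Only the surviving entries have to be moved in or out at each stage, which is the kind of saving the sparse model is meant to provide, here shown at a scale of four parameters rather than millions.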

“There are many interesting outcomes of this. It appears to be a generic result that you can apply to multiple different machine-learning models,” said Junyu Liu, who also holds positions with UChicago’s Kadanoff Center for Theoretical Physics and Department of Computer Science and startups qBraid and SeQure. “It might be helpful in the long term for large-scale machine-learning applications, possibly even language processing.”

The team’s work “shows solidly that fault-tolerant quantum algorithms could potentially contribute to most state-of-the-art, large-scale machine-learning problems,” they wrote in the paper.

“We wanted to have a fresh look at the field and see to what extent quantum computers may help in training large-scale pruned classical networks,” said co-author Prof. Jens Eisert of Freie Universität Berlin. “We were surprised to see a lot of potential. We not only suggested the core idea, but went into painstaking detail of the scheme.”

The paper looks toward a future where quantum computing and large-scale machine learning go hand in hand.

“[M]achine learning might possibly be one of the flag applications of quantum technology,” the team wrote.

The paper’s other co-authors were Jin-Peng Liu of MIT and UC Berkeley and Yunfei Wang of Brandeis University.

Citation: “Towards provably efficient quantum algorithms for large-scale machine-learning models,” Liu, J., Liu, M., Liu, J.-P., et al., Nature Communications. DOI: 10.1038/s41467-023-43957-x