Jorge Nocedal (Northwestern) - Zero-Order Methods for the Optimization of Noisy Functions

Zero-Order Methods for the Optimization of Noisy Functions

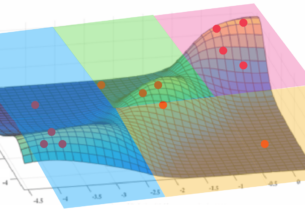

This talk presents a finite difference quasi-Newton method for the minimization of noisy functions. The method takes advantage of the scalability and power of BFGS updating, and employs an adaptive procedure for choosing the differencing interval h based on the noise estimation techniques of Hamming and More and Wild. This noise estimation procedure and the selection of h are inexpensive but not always accurate, and to prevent failures the algorithm incorporates a recovery mechanism that takes appropriate action in the case when the line search procedure is unable to produce an acceptable point. A novel convergence analysis is presented that considers the effect of a noisy line search procedure. Numerical experiments comparing the method to a model based trust region method are presented.

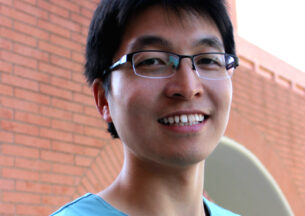

Jorge Nocedal

My research interests are in optimization and its application in machine learning and in disciplines involving differential equations. I specialize in nonlinear optimization, both convex and non-convex; deterministic and stochastic. There is a need for solving ever larger optimization problems, and throughout the years, I have developed algorithms that scale well with the number of variables, make judicious use of second-order information, and parallelize well. The motivation for my current algorithmic and theoretical research stems from applications in image and speech recognition, recommendation systems, and search engines.