Faculty Bill Fefferman and Chenhao Tan Receive Google Research Scholar Awards

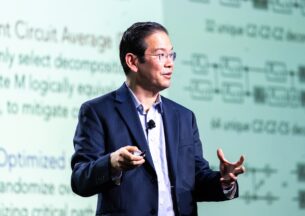

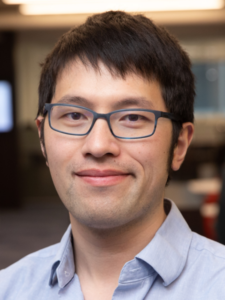

Assistant professors Bill Fefferman and Chenhao Tan each received grants from the Google Research Scholar Program, which funds world-class research by early-career computer scientists. Fefferman received one of five awards in the quantum computing area, while Tan was one of seven researchers funded for work in natural language processing.

Both researchers said the grants will help accelerate their research: finding practical applications for today’s quantum computers, and building new, explainable AI models for understanding and generating language.

Fefferman’s proposal, “Understanding the feasibility of practical applications from quantum supremacy experiments,” will explore the theoretical foundations of how current and near-future quantum computers can generate certified random numbers. If the approach holds up, it would provide a valuable use case for quantum computing in cryptography, cryptocurrency, and other applications that require truly random keys for security.

“We’re in this really exciting era where we’re seeing quantum computers that for the first time can solve problems that are at the border of what can be computed classically,” Fefferman said. “The next step is to show that we can channel this power to solve something useful. Quantum computers, by their nature, are non-deterministic, and so they can produce random numbers. But the really interesting question is, how do you trust the quantum computer?”

Fefferman will explore that question by probing the theory underlying the random circuit sampling experiment that Google used in 2019 to claim “quantum supremacy,” meaning a task performed by a quantum computer that would be all but impossible for a classical computer. A proposal by Scott Aaronson described a protocol for using that experiment to generate certified random numbers, but the theory behind it needs further scrutiny before it can be confirmed.

“What Scott was saying is that today, with the power that Google already has with this device, you could in principle generate certified random numbers using the existing experiment, assuming a very non-standard conjecture from complexity theory,” Fefferman said. “My proposal is about giving strong evidence that this conjecture is true, which will increase our confidence that Google’s experiment can produce certified random numbers.”

Tan’s proposal, “Robustifying NLP models via Learning from Explanations,” aims to protect increasingly common natural language processing (NLP) systems against vulnerabilities and common mistakes by making their decisions more transparent. Though chatbots, digital assistants, and similar technologies have rapidly improved at understanding commands and questions and returning accurate, realistic responses, they can still be tripped up by the wording of a request or by contradictory information that a human would easily recognize.

“The overarching goal is about how to make the model more robust and less brittle against small changes in the input,” Tan said. “A lot of findings have shown that these models may not actually understand how to perform the tasks that they are asked to perform, but use some shortcuts or spurious correlations to give you the right answer, with the wrong reason.”

For his Google-funded research, Tan will explore new ways of training these models. Instead of the current practice of providing raw information and letting neural networks form their own associations, Tan’s approach would include explanatory information that guides models towards using logic to arrive at an answer. For example, the training data may identify the important sentences in a body of text, or include reasoning processes that contain more information about how the input should be used to answer queries.

If successful, these more robust models may not only be better at day-to-day tasks but also harder to “game” by malicious users looking to break the system or artificially trigger a desired output, such as a loan approval or a post that slips past a misinformation filter. Tan’s group will also explore new approaches for popular NLP models such as GPT-3, which generates realistic text.

“We think explanation can help the AI actually learn the underlying reasoning and avoid these critical mistakes against attacks,” Tan said. “You not only have the labels, but explain the labels, and then see how you can teach the model to learn from such explanations.”

In previous years, other UChicago CS faculty have received Google Research Awards, including Andrew Chien for the study of green cloud computing, Junchen Jiang for research on streaming video analytics, and Yanjing Li for a project on “Resilient Accelerators for Deep Learning Training Tasks.”