Ken Nakagaki (MIT) - Designing Actuated Tangible UIs that blend the Joy of Physical Interaction, Robotic Actuation, and Digital Computation

Squishing clay between fingers, twisting string into geometric shapes, tinkering with the gears of a mechanical toy: physical objects fill our world and invite us to touch them and interact with them using our hands. These tangible actions and sensations evoke a sense of joy and curiosity that stimulates our ingenuity, imagination, and creativity. However, mainstream HCI focuses on Graphical User Interfaces (GUIs), which lack the joy of interaction we experience with physical tools and materials because the information is trapped behind a flat screen.

My research in Actuated Tangible User Interfaces (A-TUIs) envisions an emerging future of physical environments that incorporate dynamic actuation (e.g., shape change and motion) enabled by advanced robotic hardware, closed-loop control, and interactive computing technologies. These interfaces enrich our interactions with digital information and with our physical environments through motion and transformation. My research takes interdisciplinary approaches spanning engineering, human cognition, and interaction design to advance A-TUIs' ability to weave themselves into the fabric of everyday life. Examples include:

– Shape-shifting surfaces that can dynamically render material properties (from clay to liquid) in response to touch.

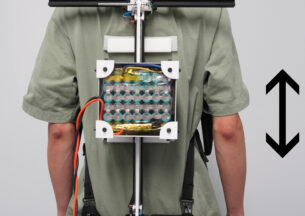

– Transforming robotic strings that can fit in the hand, wrap around the body, and form expressive shapes.

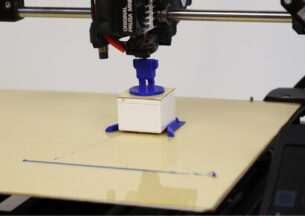

– Table-top swarm robots that actuate interchangeable external mechanical shells to afford new functions, forms, and haptic outputs.

In this talk, I will discuss my research approaches and related projects that reconceptualize the relationship between humans and machines, a future relationship rooted in the joy of tangibility.

Host: Blase Ur

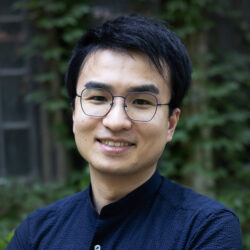

Ken Nakagaki

Ken Nakagaki is an interaction designer and HCI (Human-Computer Interaction) researcher from Japan. He joined the University of Chicago’s Computer Science Department as an Assistant Professor and founded the Actuated Experience Lab, or AxLab, in 2022.

His research focuses on inventing and designing novel user interface technologies that seamlessly integrate dynamic digital information and computational aids into everyday physical tools and materials. He is passionate about creating novel embodied physical experiences with these interfaces through curiosity-driven tangible prototyping. At AxLab, he pursues research in actuated and shape-changing user interface technologies to design the future of user experiences.

Before joining UChicago, he received his Ph.D. from the MIT Media Lab, where he was advised by Prof. Hiroshi Ishii and focused his research on Actuated Tangible User Interfaces. Ken has presented at top HCI conferences (ACM CHI, UIST, TEI) and led demonstrations of his work at international exhibitions and museums, including the Ars Electronica Festival and Laval Virtual. He has received numerous awards, including MIT Technology Review's Innovators Under 35 Japan & Asia Pacific, the Japan Media Arts Festival, and the James Dyson Award.