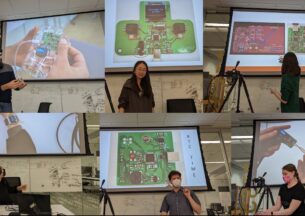

Rika Antonova (Stanford) - Enabling Self-Sufficient Robot Learning

Autonomous exploration and data-efficient learning are important ingredients for helping machine learning handle the complexity and variety of real-world interactions. In this talk, I will describe methods that provide these ingredients and serve as building blocks for enabling self-sufficient robot learning.

First, I will outline a family of methods that facilitate active global exploration. Specifically, they enable ultra data-efficient Bayesian optimization in reality by leveraging experience from simulation to shape the space of decisions. In robotics, these methods enable success with a budget of only 10-20 real robot trials for a range of tasks: bipedal and hexapod walking, task-oriented grasping, and nonprehensile manipulation.
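
As a rough illustration of the idea in this paragraph, the sketch below runs Bayesian optimization under a 15-trial budget, with a Gaussian-process kernel that compares candidates in a simulation-derived feature space rather than in raw parameter space. It is a toy under stated assumptions, not the speaker's method: `sim_features`, `real_trial`, the 1-D search space, and the UCB acquisition rule are all hypothetical stand-ins.

```python
import numpy as np

def sim_features(x):
    # Hypothetical stand-in for an embedding learned from simulation,
    # e.g. summary statistics of simulated trajectories for parameters x.
    return np.stack([np.sin(3.0 * x), np.cos(2.0 * x)], axis=-1)

def rbf_kernel(a, b, length_scale=0.5):
    # Squared-exponential kernel evaluated in the feature space.
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / length_scale**2)

def gp_posterior(x_obs, y_obs, x_cand, noise=1e-3):
    # Zero-mean GP posterior mean and standard deviation at the candidates.
    f_obs, f_cand = sim_features(x_obs), sim_features(x_cand)
    k_oo = rbf_kernel(f_obs, f_obs) + noise * np.eye(len(x_obs))
    k_co = rbf_kernel(f_cand, f_obs)
    mean = k_co @ np.linalg.solve(k_oo, y_obs)
    var = 1.0 - np.einsum("ij,ji->i", k_co, np.linalg.solve(k_oo, k_co.T))
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def real_trial(x):
    # Toy objective standing in for the reward of one real-robot trial.
    return float(np.sin(3.0 * x) - 0.1 * x**2)

def bayes_opt(budget=15, n_init=3, beta=2.0, seed=0):
    rng = np.random.default_rng(seed)
    x_cand = np.linspace(-2.0, 2.0, 200)
    x_obs = rng.choice(x_cand, size=n_init, replace=False)
    y_obs = np.array([real_trial(x) for x in x_obs])
    for _ in range(budget - n_init):
        mean, std = gp_posterior(x_obs, y_obs, x_cand)
        # Upper confidence bound: favor high predicted reward or high uncertainty.
        x_next = x_cand[np.argmax(mean + beta * std)]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, real_trial(x_next))
    best = int(np.argmax(y_obs))
    return x_obs[best], y_obs[best], len(y_obs)

best_x, best_y, n_trials = bayes_opt()
print(f"best reward {best_y:.3f} at x={best_x:.3f} after {n_trials} trials")
```

Because the kernel measures distance between simulated behaviors, candidates that behave alike in simulation share information even when their raw parameters differ, which is one way simulation experience can shape the search space.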

Next, I will describe how to bring simulations closer to reality. This is especially important for scenarios with highly deformable objects, where simulation parameters influence the dynamics in unintuitive ways. Success here hinges on finding a good representation for the state of deformables. I will describe adaptive distribution embeddings that provide an effective way to incorporate noisy state observations into modern Bayesian tools for simulation parameter inference. This novel representation makes it possible to estimate posterior distributions over simulation parameters, such as elasticity, friction, and scale, even for highly deformable objects and with only a small set of real-world trajectories.
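
To make "distribution embedding" concrete, here is a minimal sketch of one standard, non-adaptive variant: a kernel mean embedding approximated with random Fourier features. It maps a noisy, unordered set of observed points (for example, keypoints on a cloth) to a fixed-length vector. This is not the adaptive method from the talk; the function names, feature count, and length scale are illustrative assumptions.

```python
import numpy as np

def make_rff(dim, n_features=256, length_scale=1.0, seed=0):
    # Random Fourier features approximating an RBF kernel on R^dim.
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=1.0 / length_scale, size=(dim, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)

    def phi(points):
        return np.sqrt(2.0 / n_features) * np.cos(points @ w + b)

    return phi

def embed(points, phi):
    # Averaging the features makes the embedding independent of point
    # order and of how many points were observed.
    return phi(points).mean(axis=0)

rng = np.random.default_rng(1)
phi = make_rff(dim=3)
cloud_a = rng.normal(0.0, 1.0, size=(500, 3))   # noisy observation of a state
cloud_b = rng.normal(0.0, 1.0, size=(300, 3))   # same state, fewer points
cloud_c = rng.normal(2.0, 1.0, size=(400, 3))   # a different state

dist_same = np.linalg.norm(embed(cloud_a, phi) - embed(cloud_b, phi))
dist_diff = np.linalg.norm(embed(cloud_a, phi) - embed(cloud_c, phi))
print(dist_same, dist_diff)
```

A fixed-length vector like this can serve as the observation summary that a simulation-parameter inference method conditions on, regardless of how many points each real trajectory yields.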

Lastly, I will share a vision of using distribution embeddings to make the space of stochastic policies in reinforcement learning suitable for global optimization. This research direction involves formalizing and learning novel distance metrics on this space and will support principled ways of seeking diverse behaviors. This can unlock truly autonomous learning, where learning agents have incentives to explore, build useful internal representations, and discover a variety of effective ways of interacting with the world.

Speakers

Rika Antonova

I am a postdoctoral scholar in the Interactive Perception and Robot Learning lab at Stanford University. I received the NSF Computing Innovation Fellowship for research on active learning of transferable priors, kernels, and latent representations for robotics. I completed my Ph.D. work on data-efficient simulation-to-reality transfer at KTH. Earlier, I completed my research Master’s degree at the Robotics Institute at Carnegie Mellon University, where I developed Bayesian optimization approaches for robotics and personalized tutoring systems. Prior to that, I was a software engineer at Google, first in the Search Personalization group and then on the Character Recognition team (developing the open-source OCR engine Tesseract).