Steve Hanneke (TTIC) - Learning Whenever Learning is Possible: Universal Learning Under General Stochastic Processes

I will present a general theory of learning and generalization without the i.i.d. assumption, starting from first principles. We focus on the problem of universal consistency: the ability to estimate any function from X to Y. We endeavor to answer the question: Does there exist a learning algorithm that is universally consistent under every process on X for which universal consistency is possible? Remarkably, we find the answer is “Yes”. Thus, we replace the i.i.d. assumption with only the most natural (and necessary!) assumption: that learning is at least possible. Along the way, we also find a concise characterization of the family of all processes that admit universally consistent learners. One challenge for learning is that some of these processes do not even have a law of large numbers.

Host: Brian Bullins

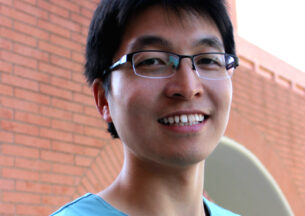

Steve Hanneke

Steve Hanneke is a Research Assistant Professor at the Toyota Technological Institute at Chicago. His research explores the theory of machine learning, with a focus on reducing the number of training examples sufficient for learning. His work develops new approaches to supervised, semi-supervised, transfer, and active learning, and also revisits the basic probabilistic assumptions at the foundation of learning theory. Steve earned a Bachelor of Science degree in Computer Science from UIUC in 2005 and a Ph.D. in Machine Learning from Carnegie Mellon University in 2009 with a dissertation on the theoretical foundations of active learning.