Samory K. Kpotufe (Columbia) - From Theory To Clustering

For Zoom information, subscribe to the Stats Seminars email list.

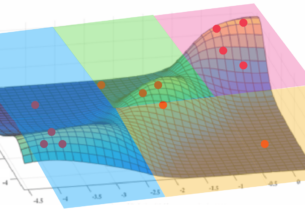

Clustering is a basic problem in data analysis: partitioning data into meaningful groups called clusters. Practical clustering procedures tend to meet two criteria: flexibility in the shapes and number of clusters they estimate, and efficient processing. While many practical procedures meet these criteria in various applications, general guarantees often hold only for theoretical procedures that are hard, if not impossible, to implement. A main aim of this talk is to address this gap.

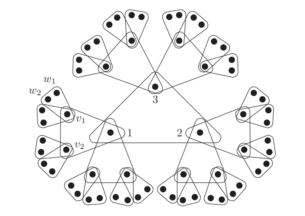

We will discuss two recent approaches that compete with state-of-the-art procedures while relying on a rigorous analysis of clustering. The first approach fits within the framework of density-based clustering, a family of flexible clustering methods. It builds primarily on theoretical insights into nearest-neighbor graphs, a geometric data structure shown to encode local information about the data density. The second approach speeds up kernel k-means, a popular method that embeds data in a Hilbert space and clusters it there. This more efficient approach relies on a new interpretation, and an alternative use, of kernel sketching as a geometry-preserving random projection in Hilbert space.
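To make the density idea concrete, here is a minimal sketch of generic level-set clustering on a k-nearest-neighbor graph. It is an illustration under simplifying assumptions, not the speaker's algorithm: the distance from a point to its k-th nearest neighbor serves as an inverse density proxy, points whose k-NN radius is at or below a chosen quantile form the high-density set, kept points that are mutual k-nearest neighbors are linked, and the connected components are reported as clusters. The function `knn_density_clusters` and its parameters `k` and `radius_quantile` are hypothetical names chosen for this example; low-density points get the label -1.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def knn_density_clusters(points: List[Point], k: int, radius_quantile: float) -> List[int]:
    """Hypothetical sketch: level-set clustering on a mutual k-NN graph.

    Returns one label per point; -1 marks low-density points (noise).
    """
    n = len(points)
    # Brute force: (distance, index) pairs to every other point, sorted ascending.
    neighbors = []
    for i in range(n):
        order = sorted((math.dist(points[i], points[j]), j) for j in range(n) if j != i)
        neighbors.append(order)
    # k-NN radius: distance to the k-th nearest neighbor; small radius = high density.
    radius = [neighbors[i][k - 1][0] for i in range(n)]
    # Keep the densest points: those whose radius is at most the chosen quantile.
    cutoff = sorted(radius)[min(n - 1, int(radius_quantile * n))]
    kept = {i for i in range(n) if radius[i] <= cutoff}
    knn_sets = [{j for _, j in neighbors[i][:k]} for i in range(n)]

    # Union-find over kept points, joining pairs that are mutual k-nearest neighbors.
    parent = list(range(n))

    def find(a: int) -> int:
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    for i in kept:
        for j in knn_sets[i]:
            if j in kept and i in knn_sets[j]:
                parent[find(i)] = find(j)

    # Relabel components as 0, 1, 2, ... in order of first appearance.
    labels: List[int] = []
    ids: dict = {}
    for i in range(n):
        if i not in kept:
            labels.append(-1)
        else:
            labels.append(ids.setdefault(find(i), len(ids)))
    return labels
```

For example, with `points = [(0, 0), (0.1, 0), (0, 0.1), (0.1, 0.1), (5, 5), (5.1, 5), (5, 5.1), (5.1, 5.1), (20, 20)]`, calling `knn_density_clusters(points, k=3, radius_quantile=0.8)` returns `[0, 0, 0, 0, 1, 1, 1, 1, -1]`: each tight group becomes a cluster and the distant point is dropped as low density. The brute-force neighbor search costs O(n² log n), so this is only meant for small inputs.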
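The kernel-sketching construction itself is not spelled out here, so the following sketch swaps in a different, well-known randomized embedding, random Fourier features (Rahimi and Recht) for the Gaussian kernel, to illustrate the general pattern behind faster kernel k-means: map each point to a random finite-dimensional vector whose Euclidean geometry approximates the Hilbert-space geometry, then run ordinary k-means on those vectors. It is not the method from the talk. The helpers `random_fourier_features`, `sq_dist`, and `kmeans`, the bandwidth `sigma`, the embedding dimension `dim`, and the farthest-first initialization are all assumptions made for this example.

```python
import math
import random
from typing import List, Sequence

# Hypothetical illustration: random Fourier features, NOT the talk's kernel sketching.


def random_fourier_features(points: Sequence[Sequence[float]], dim: int,
                            sigma: float, seed: int = 0) -> List[List[float]]:
    """Map points to z(x) with z(x)·z(y) ≈ exp(-||x - y||^2 / (2 sigma^2))."""
    rng = random.Random(seed)
    d = len(points[0])
    # Frequencies w ~ N(0, sigma^-2 I) and phases b ~ Uniform[0, 2*pi].
    ws = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(d)] for _ in range(dim)]
    bs = [rng.uniform(0.0, 2 * math.pi) for _ in range(dim)]
    scale = math.sqrt(2.0 / dim)
    return [
        [scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + b) for w, b in zip(ws, bs)]
        for x in points
    ]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans(z: List[List[float]], k: int, iters: int = 20) -> List[int]:
    """Lloyd's algorithm with deterministic farthest-first initialization."""
    centers = [z[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        far = max(range(len(z)), key=lambda i: min(sq_dist(z[i], c) for c in centers))
        centers.append(z[far])
    labels = [0] * len(z)
    for _ in range(iters):
        # Assignment step: nearest center in the embedded space.
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in z]
        # Update step: each center moves to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(z, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels
```

For instance, embedding two tight, well-separated groups of three points with `dim=200` and `sigma=1.0` and then calling `kmeans(z, k=2)` should put each group in its own cluster. Because the embedding has a fixed dimension, each Lloyd iteration costs O(n · dim · k) and never forms the n × n kernel matrix; the price is an approximation error that shrinks as `dim` grows.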

Finally, we will present recent experimental results combining the benefits of both approaches in the IoT application domain.

The talk is based on various works with collaborators Sanjoy Dasgupta, Kamalika Chaudhuri, Ulrike von Luxburg, Heinrich Jiang, Bharath Sriperumbudur, Kun Yang, and Nick Feamster.

Host: Rebecca Willett

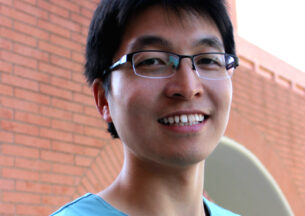

Samory K. Kpotufe

I work in statistical machine learning, with an emphasis on common nonparametric methods (e.g., kNN, trees, kernel averaging). I’m particularly interested in adaptivity, i.e., how to automatically leverage beneficial aspects of data as opposed to designing specifically for each scenario. This involves characterizing statistical limits, under modern computational and data constraints, and identifying favorable aspects of data that help circumvent these limits.

Some specific interests: notions of intrinsic data dimension; benefits (or lack thereof) of sparse or manifold representations; performance limits and adaptivity in active learning, transfer learning, and multi-task learning; hyperparameter tuning and guarantees in density-based clustering.