Cong Ma (UChicago) - Bridging Offline Reinforcement Learning and Imitation Learning: A Tale of Pessimism

Bridging Offline Reinforcement Learning and Imitation Learning: A Tale of Pessimism

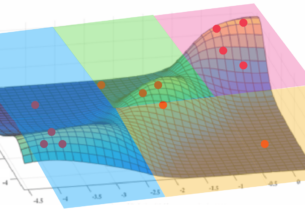

In this talk, I will focus on offline reinforcement learning (RL) problems, where one aims to learn an optimal policy from a fixed dataset without active data collection. Depending on the composition of the offline dataset, two categories of methods are used: imitation learning, which is suitable for expert datasets, and vanilla offline RL, which often requires datasets with uniform coverage. However, in practice, datasets often deviate from these two extremes, and the exact data composition is usually unknown a priori.
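To make the two extremes concrete, here is a minimal Python sketch on a toy single-context bandit (a multi-armed bandit), assuming rewards in [0, 1]. The helper names `behavior_cloning` and `empirical_best_arm` and the toy dataset are hypothetical illustrations, not code from the talk: the first mimics imitation learning by copying the most frequently logged action, and the second mimics vanilla offline RL by trusting the empirical mean of every logged arm.

```python
from collections import Counter, defaultdict


def behavior_cloning(dataset):
    """Imitation-style rule (hypothetical helper): return the action that
    appears most often in the logged (action, reward) pairs."""
    counts = Counter(a for a, _ in dataset)
    return max(counts, key=counts.get)


def empirical_best_arm(dataset):
    """Vanilla offline-RL-style rule (hypothetical helper): return the logged
    action with the highest empirical mean reward, ignoring how often it was seen."""
    totals = defaultdict(float)
    counts = Counter()
    for a, r in dataset:
        totals[a] += r
        counts[a] += 1
    return max(counts, key=lambda a: totals[a] / counts[a])


# Toy expert-heavy dataset (hypothetical): arm 0 is logged 9 times
# (empirical mean 8/9), arm 1 only once, with a single reward of 1.0.
expert_data = [(0, 1.0)] * 8 + [(0, 0.0)] + [(1, 1.0)]

print(behavior_cloning(expert_data))    # copies the dominant logged action
print(empirical_best_arm(expert_data))  # trusts the single sample of arm 1
```

On this expert-heavy data, copying the expert selects arm 0, while the greedy rule selects arm 1 on the strength of one lucky sample; if arm 1 is in fact worse, the greedy rule has been fooled. This is the failure mode that motivates requiring uniform coverage for vanilla offline RL.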

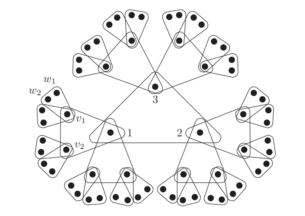

To bridge this gap, I will present a new offline RL framework that smoothly interpolates between these two extremes of data composition, thereby unifying imitation learning and vanilla offline RL. The framework is centered on a weak version of the concentrability coefficient that measures only the deviation of the behavior policy from the expert policy. Under this framework, we show that a lower confidence bound (LCB) algorithm based on pessimism is adaptively optimal for offline contextual bandit problems across the entire range of data composition. Extensions to Markov decision processes will also be discussed.
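As a rough illustration of the pessimism principle, the sketch below applies a lower confidence bound rule to a toy multi-armed bandit (a contextual bandit with a single context). It assumes rewards in [0, 1] and uses a Hoeffding-style penalty with a hypothetical confidence parameter `delta`; the exact bonus and constants in the talk's algorithm may differ, and the function names are hypothetical. It also computes the bandit special case of the weak (single-policy) concentrability coefficient, assumed here to be C* = 1/μ(a*) for a behavior distribution μ and a deterministic expert action a*, so that C* = 1 for purely expert data and C* grows as the data drift away from the expert.

```python
import math
from collections import Counter, defaultdict


def lcb_policy(dataset, delta=0.1):
    """Pessimistic rule (hypothetical helper): pick the logged arm maximizing
    empirical mean minus a Hoeffding-style penalty. Rewards are assumed in
    [0, 1]; the penalty form is an illustrative choice, not the talk's exact one."""
    totals = defaultdict(float)
    counts = Counter()
    for a, r in dataset:
        totals[a] += r
        counts[a] += 1
    num_arms = len(counts)

    def lower_bound(a):
        mean = totals[a] / counts[a]
        # Rarely logged arms get a large penalty, so they are not trusted.
        penalty = math.sqrt(math.log(2 * num_arms / delta) / (2 * counts[a]))
        return mean - penalty

    return max(counts, key=lower_bound)


def single_policy_concentrability(behavior_probs, expert_action):
    """Bandit special case of the weak coefficient (assumed form):
    C* = 1 / mu(a*), where mu is the behavior distribution over arms."""
    return 1.0 / behavior_probs[expert_action]


# Toy dataset (hypothetical): arm 0 logged 9 times with empirical mean 8/9,
# arm 1 logged once with a single reward of 1.0.
data = [(0, 1.0)] * 8 + [(0, 0.0)] + [(1, 1.0)]
print(lcb_policy(data))

# Near-expert behavior vs. a uniform behavior policy over 10 arms.
print(single_policy_concentrability({0: 0.9, 1: 0.1}, expert_action=0))
print(single_policy_concentrability({a: 0.1 for a in range(10)}, expert_action=0))
```

Because the single sample of arm 1 earns a large penalty, the LCB rule falls back on the well-covered arm 0. Note that this only requires the data to cover the expert's action, not every arm, which is exactly the quantity the weak concentrability coefficient tracks: about 1.11 for the near-expert distribution above and 10 for the uniform one.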

Contact Denise Howard (denise.howard@ttic.edu) for Zoom and in-person attendance details.

Host: Toyota Technological Institute at Chicago

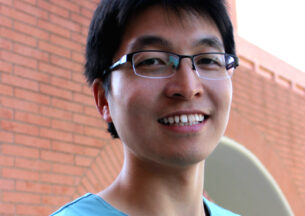

Cong Ma

Cong Ma is an assistant professor in the Department of Statistics at the University of Chicago. Prior to joining UChicago, he was a postdoctoral researcher at UC Berkeley, advised by Professor Martin Wainwright. He received his Ph.D. from Princeton University in May 2020, advised by Professor Yuxin Chen and Professor Jianqing Fan. He is broadly interested in the mathematics of data science, with a focus on the interplay between statistics and optimization.